“If we have to wait for the next pandemic to bring about big change, then we’re in big trouble!”

—Peter Scupelli

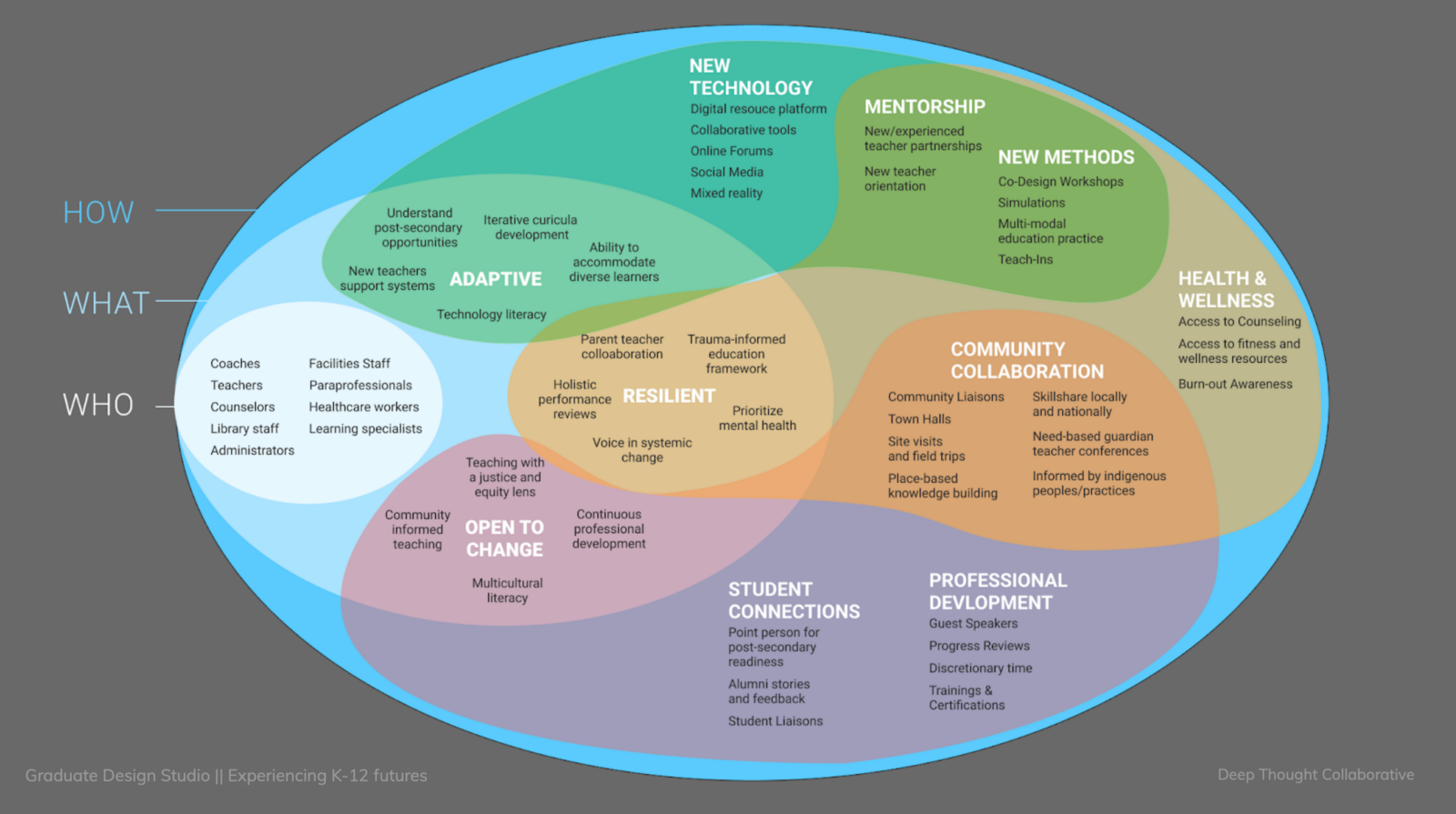

This week, our team presented our exploratory research findings to our clients from Prospect Studio, following a “dry run” the week prior. The feedback we received was generally very positive. In particular, I was pleased to learn that the “ARC” concept aligned with the clients’ understanding, and they even suggested that they would adopt this terminology themselves! There was a lot of back and forth on this concept, and it was incredibly validating. By recognizing the overlap and potential integration of these attributes (Adaptive, Resilient, and open to Change) and addressing them as a single verb rather than three distinct adjectives, we’ve reframed our inquiry to reflect actions and behaviors.

Fiona appreciated that we identified the multiple roles of educators, who must address their own social and emotional needs while also supporting students. She pointed out that teachers also need tools for communication.

Our Collaboration Structure diagram was well received by Jenny Hoang. There was some confusion around Board members and their placement within districts. Carol clarified this well for the entire team, and I continue to be grateful for her contributions. I’m very fortunate to be working with a team that has a nearly two-year working relationship: we’ve developed a beautiful shorthand together, and we recognize each other’s cues.

Both Jenny and Fiona resonated with the idea of administrators as a leverage point, which is promising for the next phase of our research. Jenny questioned our scope under the MLP: the national level might be too broad for some contexts, and we had not distinguished between state-level and national/federal-level policymakers. We will clarify this going forward. Otherwise, the mapping of structured interactions was a huge success.

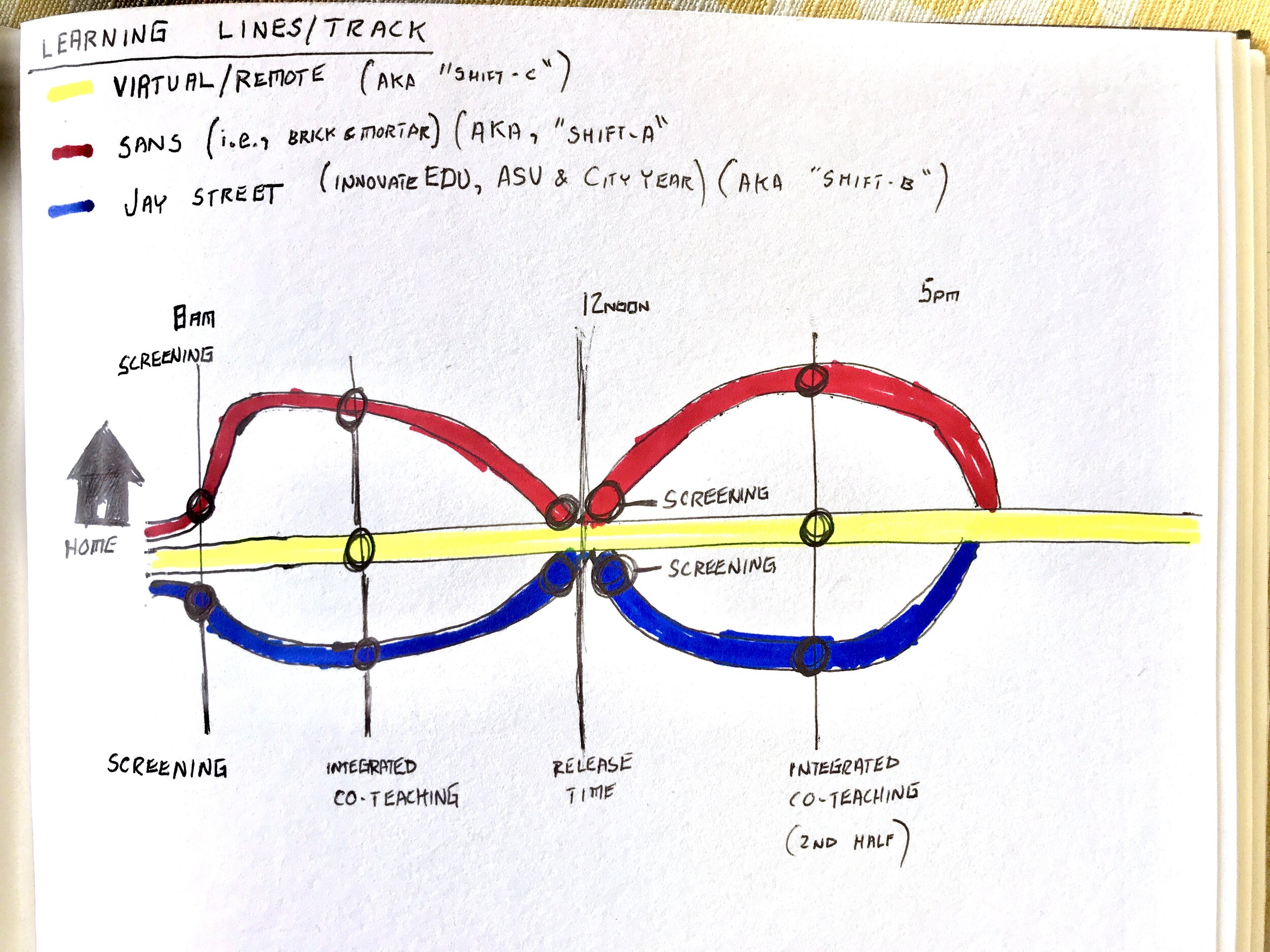

As we outlined this structure, a question arose: which leverage points are we considering, and what insights can we glean from the adaptations made to facilitate learning during COVID? We are in the middle of a grand experiment in remote learning, but what are the lessons or takeaways from this experience?

We’re especially interested in the role of technology in facilitating communication. Video conferencing is only one small part of this. Thinking about organizational structures, we want to improve the modes and means of communication between administrators, educators, and other stakeholders. During our critique, Cat explained that open communication presents problems within a framing that leads to practical solutions. Being able to express needs, such as a mid-day break, can have a profound impact on educators’ day-to-day quality of life. Jenny agreed and, based on her experience exploring PPS, believes there is strong interest in this area.

After our Wednesday workshop, our team met to discuss these important next steps. It was also Cat’s birthday!

We had a good time, but still got a lot of work done! The work of preparing for this presentation was unevenly distributed, so we’ve amended our team contract to (hopefully) improve how we delegate future tasks. We’re also rethinking the responsibilities of team members who are not assigned as facilitator or notetaker in a given week. One challenge is that some tasks end up being more involved than originally thought. Splitting up the work can be like cutting a pizza while blindfolded: everyone gets a slice, but there’s no guarantee the slices will be anywhere near the same size. In the future, when someone finds that their task is too big or too small, we can further split and break down tasks (where possible) to keep everyone productive but not overburdened.

After addressing our coordination for this next phase, we began mapping our current questions and considering what we wanted to learn. Through this exercise, we realized that almost any available method of active research could provide insights into our questions, so we simply needed to prioritize the methods that would work best for us and, from there, design experiences that will illuminate these areas.

Our next steps will include generative research and workshops, and our hope is to gain more insights into this aspect of interpersonal and organizational communication. Through our primary research, and framing under ARC, we’ve identified a few key aspects of effective communication:

- Problem-solving mindset
- Active listening
- Maintaining open communication and feeling heard

Other areas of consideration regarding collaboration structures:

- How do educators coordinate their efforts to bring about change?
- How do they support or hinder adaptations or changes?
- What visions do administrators have for the future of PPS, their roles, and the roles of educators?

Peter recommended that we also consider future contexts, and think about relevant trainings and preparation. Pandemics are not frequent, but when COVID-19 arrived, there wasn’t any plan in place. This put districts in an especially difficult position—reacting to sudden change is never easy, and they had no prior practice. Other sectors (especially government sectors) often need to prepare for scenarios that are unlikely to happen but are potentially very disruptive.

Thinking about this point reminds me of a very grim reality: school shootings in the United States have become so frequent that schools now hold drills. I was a high school student in 1999, when Eric Harris and Dylan Klebold committed a horrific massacre at Columbine High School in Colorado. It’s difficult to describe what a profound impact this had on my experience with public education. I was growing up in rural Utah, and our proximity to this tragic event prompted an immediate reaction. My school began conducting “random” locker searches. Teachers and counselors began interrogating students about their media consumption; at that time, playing DOOM and listening to Metallica were believed to be red flags.

As a community, what we needed were meaningful policies. Instead, we were subjected to onerous and disruptive security measures, derived from alarmist and factually inaccurate claims. These measures didn’t prevent such tragedies, but they did add to the hardship of students who were already terrorized. School shooting drills have not made today’s kids any safer, because the root cause remains unaddressed. We needed policy then, and we need policy now.

Good policy, however, is only possible when there is a clear understanding of the problem. An important role of a vision of the future is to anticipate needs before they become a crisis. This can lead to preventative policy and proactive measures. To understand the present, we also need to understand the past. To understand the future, we need to understand the present. To gain deeper insights beyond interviews, we’re planning to begin with a cultural probe diary study for participants (either in a format of their choosing or sent to them daily by us) and then bring a mix of administrators and teachers into a workshop.

We’re still working out the details, but our current favored approach is the “Draw Toast” exercise.

We’re nearly done with our protocols and will be contacting our participants on Monday. I’m curious about what will be confirmed (from our exploratory stage) and what will be new or contradictory to our current understanding. We’re now focusing on something specific, but we are carrying some assumptions into this next step. I’m excited (and a little nervous) to learn more from our participants and to benefit from their lived experiences.