Late last year I began experimenting with "Vibe Coding" using Claude and MCP (Model Context Protocol) tools. I've been exploring this new frontier of human-computer collaboration, where the most critical skill isn't mastering syntax or memorizing APIs, but communicating clearly and logically in an ongoing dialog with an LLM.

A Bicycle for the Mind

Amid the chaos of a world in crisis, I’ve found hope in an unexpected place: coding. With tools like Claude.ai and MCP, I’ve been building a web app to help food pantries serve their communities better—automating inventory, breaking language barriers, and streamlining processes. This isn’t just about code; it’s about turning anxiety into action, using technology to create something meaningful. If you’ve ever wondered how AI can amplify human effort, this is a story for you.

Learning Through Building: Adding Sort & Translation Features to a Food Pantry Web App

Today was a journey of wins and "learning opportunities" as I worked on improving William Temple House’s food pantry management system. The morning started great: implementing sortable table headers was surprisingly smooth, and I’m continually impressed by the coding capabilities of Claude.ai. Working with both Claude and ChatGPT, we created a modular code structure, which made it easy to add sorting without breaking existing features.

But then came the interesting part. When I began tackling multi-language support, my API requests to OpenAI exploded into the tens of thousands. The goal seemed simple: translate food items into our client community's languages. William Temple House serves many non-English-speaking clients, including speakers of Spanish, Russian, Ukrainian, Chinese, and Arabic. I’ve integrated OpenAI's gpt-4o-mini API for translations, and everything worked beautifully... until it didn't.

Turns out a rate limit is easier to hit once the database of food items reaches critical mass. Who knew sending thousands of translation requests over a period of just a few hours would hit OpenAI's daily cap? Probably everyone who bothered to read the documentation first. :-)

It's actually kind of funny watching the error logs fill up with "please try again in 8.64 seconds" messages. Claude as an AI coding assistant was invaluable throughout this process. When I got stuck on implementing sort functionality, it helped refactor the code while maintaining the existing event-driven architecture. Later, when we hit the translation rate limits, it suggested implementing batch processing and queuing systems - solutions I wouldn't have considered immediately.
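Here is a minimal Python sketch of the batching-and-retry idea, not the code running in the pantry app. It assumes a hypothetical `translate_batch` function that takes a list of item names and returns their translations, and a hypothetical `RateLimitError` standing in for whatever exception your API client raises. The only detail borrowed from my logs is the "try again in 8.64 seconds" style of message, which the sketch tries to honor before falling back to exponential backoff.

```python
import re
import time
from typing import Callable, Iterable, Iterator, List, Optional


class RateLimitError(Exception):
    """Hypothetical stand-in for the error your API client raises."""


def batched(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield successive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def retry_delay(message: str) -> Optional[float]:
    """Extract the suggested wait from a 'try again in 8.64 seconds' message."""
    match = re.search(r"try again in ([0-9]+(?:\.[0-9]+)?) ?s", message)
    return float(match.group(1)) if match else None


def translate_all(
    names: Iterable[str],
    translate_batch: Callable[[List[str]], List[str]],
    batch_size: int = 50,
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Translate names batch by batch, waiting and retrying on rate limits."""
    results: List[str] = []
    for batch in batched(names, batch_size):
        for attempt in range(max_attempts):
            try:
                results.extend(translate_batch(batch))
                break
            except RateLimitError as err:
                if attempt == max_attempts - 1:
                    raise  # give up after the final attempt
                hinted = retry_delay(str(err))
                # Prefer the server's hint; otherwise back off 1s, 2s, 4s, ...
                sleep(hinted if hinted is not None else 2.0 ** attempt)
    return results
```

Passing `sleep` as a parameter is just a convenience so the retry logic can be exercised without actually waiting. The batch size of 50 is an arbitrary placeholder, not a recommendation from OpenAI.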

Key takeaways:

Small wins matter: The sorting feature works great in my Test UI

Read API docs before sending 10,000 requests

Sometimes the best solution is to wait 24 hours for rate limits to reset; I should be taking a break on a holiday weekend anyway

Having an AI pair programmer helps spot potential issues before they become problems

Next steps? Take a brief pause while OpenAI's rate limit resets, then return with a smarter approach to batch translations. As frustrating as the rate limit issue was, it's pushing us to build a more robust system.

I’m going to use some of this down time to reflect more on the process of building this system. I’ve learned a lot about how best to leverage AI for software development, and hope others will benefit from what I’ve found along the way.

Video Lecture: 3D Modeling Basics for Beginners – Techniques, AR Tips, and Intro to AI Tools

I have some exciting news! On October 23rd, 2024, I was once again invited to guest lecture at CMU School of Design. I decided to follow up with a recorded version to share. In this recording, made after the original lecture session, I cover the essentials of 3D modeling with a focus on beginner-friendly techniques. You'll find practical insights into mesh modeling, workflow tips for Blender, and an introduction to preparing models for augmented reality. The full lecture video is embedded below, followed by detailed notes that offer a step-by-step breakdown of theory and techniques for anyone new to 3D design. Dive in, explore, and start building your own 3D modeling skills.

Principles of Mesh Modeling

Note on Mesh Modeling Focus—Or Why This Lecture Focused Primarily on Mesh Modeling:

Meshes are the standard 3D model type used in real-time 3D engines—like Unity, Unreal, and virtually every AAA video game title in the last 30 years, going all the way back to Quake, by id Software in 1996.

Key Principles:

Use Quad Faces Whenever Possible: Design your shape faces with quads instead of triangles and ngons.

Reason: Quads are infinitely divisible, making it easier to adjust geometry resolution as needed. Tris and Ngons are not as flexible, which can lead to undesirable artifacts and poor topology.

3D games primarily use triangles (tris) instead of quads because triangles are the simplest polygon shape and are always planar (a flat surface), making them computationally faster to render in real time. That was crucial on the underpowered hardware of early gaming systems. Essentially, triangles require less processing power to calculate and display on screen than quads, which have more vertices and edges.

On modern computer hardware we can get away with more complex geometry, and it's generally a better trade-off to build mesh models from quads. That is, the computational costs are vastly outweighed by the benefits of evenly divisible face geometry and more manageable topology. Lastly, quads are easily converted into tris by adding a diagonal edge between two opposite vertices.

Work from the Lowest Possible Polygon Count: Always start with the lowest polygon count (i.e., resolution) for your model. You can increase resolution later with subdivision modifiers, but it's not as easy to reduce the resolution later.

Reason: Editing a high-resolution mesh is more difficult than working with a low-resolution one, which offers greater control and flexibility. It also takes much more processing power and memory, which will slow down Blender and increase the risk of crashes.

Keep Base Shapes Simple: Keep your base shapes as simple as possible. When adding details, create those elements as separate objects. When you hit a milestone, consider duplicating a model or a collection of models to a new instance for further refinement.

Reason: This approach makes 3D modeling more manageable, allowing for easier adjustments and maintaining clean geometry.

Use Modifiers and Non-Destructive Editing Whenever Practical: Designing a symmetrical shape? Cut it in half and use a Mirror Modifier to cut your editing time in half. Keep in mind that the most complex designs can ultimately be derived from very basic shapes: Spheres, Cones, Toruses, and Cubes.

Work From Reference Images, Even If Just A Few Basic Sketches: Press Shift + A to open the Add menu. Navigate to Image > Reference. Select the image file you want to use from your computer. The reference image will be added to your 3D Viewport, where you can position, scale, and rotate it as needed for your modeling task.

Build The Overall Form First, and Then Separate into Smaller Objects: This will ensure that your designs are cohesive and edges are properly aligned. When you're ready to divide into separate objects, duplicate the objects into a new Collection.

Experiment, Tinker, Explore, and Start Over: You're unlikely to get the design right on the first attempt. It's often necessary to work through the problem, and then start over from scratch once you've had enough time to explore the form. Reason: Your second draft will almost certainly be better than the first.
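To make the earlier point about quads converting into tris concrete, here is a small Python sketch. It uses simple fan triangulation, which is only one of several possible methods and is not necessarily what Blender does internally. The function name `triangulate_face` is my own, and the approach assumes the face is convex.

```python
from typing import List, Sequence, Tuple

Triangle = Tuple[int, int, int]


def triangulate_face(face: Sequence[int]) -> List[Triangle]:
    """Split a convex face (a sequence of vertex indices) into triangles.

    Every triangle shares the first vertex, so a quad gets exactly one
    diagonal edge, running from its first vertex to its third.
    """
    if len(face) < 3:
        raise ValueError("a face needs at least 3 vertices")
    return [(face[0], face[i], face[i + 1]) for i in range(1, len(face) - 1)]


quad = (0, 1, 2, 3)
print(triangulate_face(quad))  # [(0, 1, 2), (0, 2, 3)]
```

A quad always yields two triangles. An n-gon with five vertices yields three, which hints at why n-gons are harder to subdivide evenly.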

Blender Quality of Life Recommendations:

Save Your Project Files Early and Often: Use Blender's "Save Incremental" (⌥+⌘+S) (Option + Command + S) to manage version control. Doing this will give you the freedom to fearlessly tinker and explore (as mentioned in the previous point) before settling on a final design.

Crank Up The Number of Undo Steps: Open Edit from the top menu. Select Preferences to open the Blender Preferences window. In the Preferences window, click on the System tab. Scroll down to find the Undo Steps setting.

Increase the value (the default is 32). If you have enough system memory, set it to 256 for more flexibility in undoing actions. Close the Preferences window to save your changes.

Consider Using A Material Library: Blender has a basic built-in material library, but it's not very useful. Look into large libraries, such as PBR Material Asset Library + OneClick Add-on for Blender (https://shardsofred.gumroad.com/l/CfOnY). Creative Commons License (CC0) materials can be used for basically anything, and will save you time.

Remember to Perform a UV Unwrap on Your Model Geometry for Best Results When Texturing: The most realistic textures in the world won't help you if your model doesn't have good UV Mapping. Remember the chocolate Santa Claus example? Proper wrapping is essential for creating realism with your models. https://docs.blender.org/manual/en/latest/modeling/meshes/uv/applying_image.html

Recommended Extensions and Add-ons:

VDM Brush Baker: Helps you create and bake Vector Displacement Maps directly in Blender.

Bool Tool: Boolean operations for complex shape creation.

Node Wrangler: Enhances node editing management.

Rigify: Automated rigging solution for character animation.

Loop Tools: Useful for organic modeling (with some bugs appearing in Blender 4.2, so be sure to keep this add-on updated!).

Other Useful Add-ons: Auto Mirror, F2, Extra Mesh/Curve Objects, Extra Grease Pencil Tools, Copy Attributes Menu, and MeasureIt.

Bonus: Need furniture? Most of IKEA's catalog of products have 3D models available. Search for "IKEA" under Extensions and you can easily search and import 3D models into your scenes.

Note: Ensure 'Allow Online Access' is enabled in Blender's System Preferences for add-on updates.

Create Augmented Reality Experiences for iOS with Xcode Developer Tools, Reality Composer, and USDZ File Format

Once you've finalized your form, added necessary details, and applied your materials, you should be ready to export your model.

Step-by-Step Instructions for Preparing 3D Assets for Export to USDZ:

Duplicate Your 3D Assets and Collections: Create a new instance of your 3D assets specifically for export.

Apply All Transforms: Hit A to select all visible objects, then press ⌘ + A (Command + A) and select All Transforms to apply.

Apply All Modifiers: Apply all modifiers in the same order they were added to each model—except for subdivision, as tessellation data can (usually) be included without applying it directly to the models.

Join All Components: Hit A to select all visible objects, then press ⌘ + J (Command + J) to perform a join operation.

Export the File: Go to File > Export > Universal Scene Description (usd*).

Configure Export Settings:

Include: Check Visible Only and Selected Only.

Blender Data: Select Custom Data.

Namespace: Use the default setting (UserProperties).

Blender Names: Enable this option.

File References: Set to Relative Path.

Convert Orientation:

Z = Forward Axis

Y = Up Axis

Note: Many other 3D tools, including Xcode's tools, interpret 3D models with a different axis orientation than Blender. If you don't apply this conversion, you'll find your model improperly rotated following import. If this happens to you, double-check these settings.

Use Settings for Render: Enable this option.

Object Types: Select Mesh, Volumes, Curves.

Geometry: Enable UV Maps, Rename UV Maps, Normals.

Subdivision: Set to Best Match.

Rigging: Enable Armatures (if you have rigged and animated your model).

Materials: Select USD Preview Surface Network and Export Textures.

USDZ Texture Downsampling: Set to 1024px or up to 2048px (the largest size acceptable for iOS QuickLook).

Update File Extension: Change the export file name extension from .usdc to .usdz.
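If the Convert Orientation setting above feels abstract, this Python sketch shows what a Z-up to Y-up conversion does to a single point. It assumes the conversion amounts to a -90 degree rotation about the X axis, which is the usual way to map a Z-up scene into a Y-up one. The function name is hypothetical, and Blender's exporter handles this for you when the settings are correct.

```python
from typing import Tuple

Point = Tuple[float, float, float]


def blender_to_y_up(point: Point) -> Point:
    """Map a Z-up point (Blender) into a Y-up, Z-forward coordinate system.

    Equivalent to rotating -90 degrees about X: the old Z axis becomes
    the new Y axis, and the old -Y axis becomes the new +Z axis.
    """
    x, y, z = point
    return (x, z, -y)


print(blender_to_y_up((0, 0, 1)))   # Blender "up" lands on +Y: (0, 1, 0)
print(blender_to_y_up((0, -1, 0)))  # Blender "front" lands on +Z: (0, 0, 1)
```

A model that shows up lying on its back after import is usually a sign that this rotation was skipped.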

If no issues are encountered after export, you should be able to view your model in Augmented Reality on any iOS device. Open your exported file from iCloud, send it as an email, text, or AirDrop to another device to view.

Setting Up Xcode and Reality Composer:

The latest version of Xcode doesn't include Reality Composer, as Apple has shifted their focus to the Vision Pro. You can still access the Augmented Reality Tools for iOS devices, with some additional steps.

Step-by-Step Instructions:

Download the Latest Version of Xcode 14: Download from the provided link: https://developer.apple.com/download/all/

NOTE: You'll need to create an Apple Developer Account (it's free) to access the above link, or you can use this direct link: https://download.developer.apple.com/Developer_Tools/Xcode_14.3.1/Xcode_14.3.1.xip

Extract and Rename The Older Version of Xcode: Rename Xcode.app to Xcode14.app and place it in your Applications folder.

Open Terminal on Your Mac.

Open the Applications Folder in Finder.

Drag the Xcode14 App into Terminal: This will automatically add its path.

Add to the Path: Next to the path, add: /Contents/MacOS/Xcode.

Full Command Example: The command will look like:

/Applications/Xcode14.app/Contents/MacOS/Xcode

Run the Command: Press Enter to run the command.

You should now have access to Reality Composer in Xcode. Click on the Xcode menu in the menu bar, then click Open Developer Tool, and then click on Reality Composer.

Learn more about using Reality Composer here: https://developer.apple.com/documentation/realitykit/realitykit-reality-composer

Learn more about Apple Reality Kit and ARKit here: https://developer.apple.com/augmented-reality/tools/

BONUS: Generative AI and 3D

Tripo AI (https://www.tripo3d.ai/app) is an advanced generative AI tool that allows for both text-to-3D and image-to-3D model generation. This tool offers users an intuitive way to create complex 3D assets with minimal manual input, simply by describing what they need or providing a reference image.

Key features:

Text-to-3D and Image-to-3D Conversion: Users can input a detailed description or upload an image, and within seconds, the AI generates a draft model ready for refinement.

Prompt: "A pineapple-hedgehog with spiky fruit armor and leafy quills."

https://tripo3d.ai/preview?share=9a57357e-6262-469c-afb1-c7af74d92c93

Prompt: "A 1980s sci-fi robot stylized as a Nintendo NES product."

https://tripo3d.ai/preview?share=a08a55cd-9e66-48a5-be3d-85a26160e461

High-Speed Generation: Tripo’s AI processes are optimized for efficiency, allowing users to generate detailed models in a matter of seconds, ideal for prototyping or quick visualizations.

Customization Tools: After generating a model, users can adjust topology for increased details, or apply stylization, such as voxels.

Seamless Integration: Tripo3D supports a variety of export formats like .usdz, .obj, and .fbx, making it easy to import models into Blender and other software for further editing.

Generate full texture maps with PBRs: includes generation of PBR textures, adding even greater details beyond the geometry.

Automatic rigging and basic animations: Applies a basic animation rig to generated models and simple animations, such as a running character, to the model geometry.

Downsides:

Imprecise generation: just like AI image generators, results are unpredictable and often wrong.

Costs: Using this tool requires a membership plan, and each plan comes with a limited number of monthly credits, which limits usage.

CREDITS:

Thanks to all of these wonderful educators and content creators who continue to inform and inspire me throughout my 3D journey. Preparing this lecture required lots of time and consideration for how to condense what I’ve learned over the last five years into something I could demonstrate in under 2 hours. This wasn’t easy, but I had many fantastic resources to pull from.

If I’ve left anyone out, please leave a comment so I can include them here:

Ashley Deal and Raelynn O'Leary — CMU School of Design Faculty and Founding Partners at Dezudio http://www.dezudio.com

Phil Eichmiller — Principal Software Engineer at Autodesk: https://blogs.autodesk.com/community-journal/2022/04/26/meet-phil-eichmiller-principal-software-engineer/

YouTube Creators:

Reference Files:

Robot model created with Tripo AI

Robot model with corrected orientation

Note: Due to a bug, the robot walking animation doesn’t play back in QuickLook AR for iOS.

TUTORIAL: How to use ultra realistic Quixel Mixer materials with Fusion 360 [Part 2]

Welcome back! In Part 2, we’ll explore adding Quixel Materials to your designs in Fusion 360 and setting up a rendering scene. If you haven’t already, review Part 1 and install Quixel Mixer. You’ll want to create and export a mix for use in Fusion 360 prior to the steps in this tutorial, or download an example material set here.

First, let’s create a new project in Fusion 360:

1. Create a new Fusion 360 Project

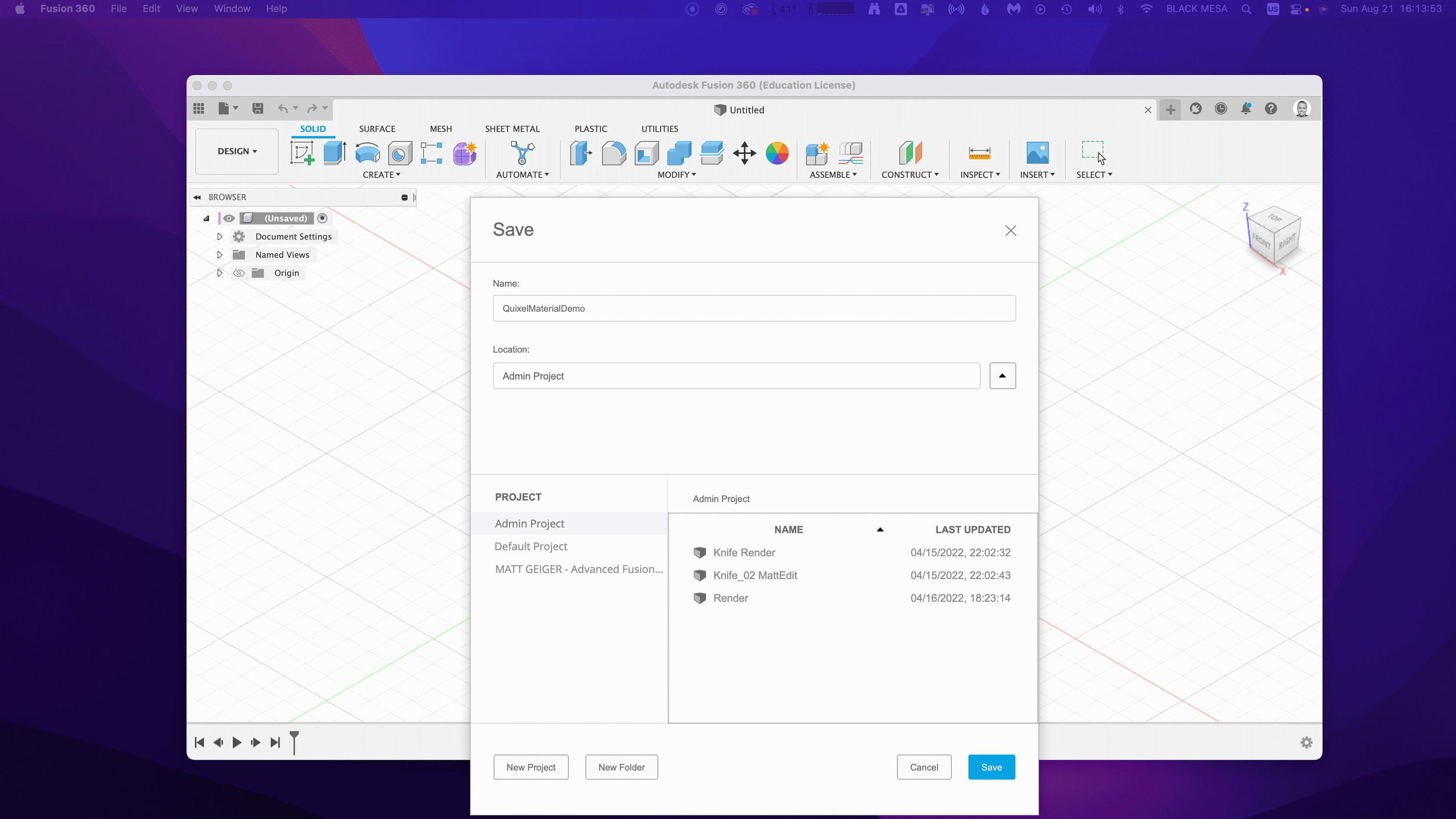

After you open Fusion 360, Click “Save” and give your project a name. In this example I used “QuixelMaterialDemo.”

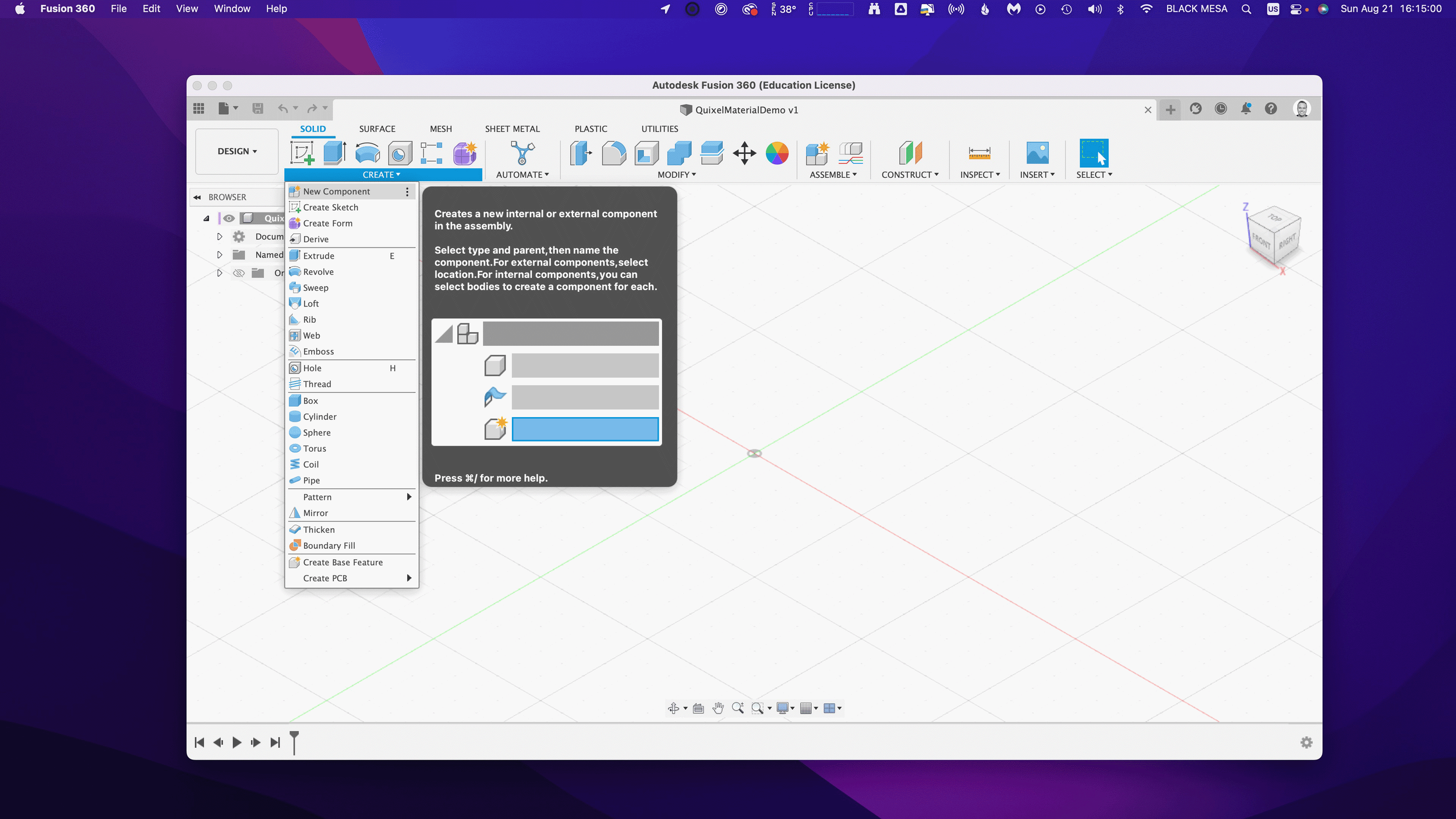

After you save your project, we’ll want to create a new component and make it active.

2. Create a new Component

This is generally a good practice with Fusion 360, because we can more easily manage changes made to the design when the timeline is broken up by individual component histories. Name your component “Floor” and make sure “Activate” is selected (it should be by default), then click “OK” to continue.

Next, we’ll want to create a sketch to define the floor’s dimensions. Click “Create” and make a Center Rectangle on the bottom plane.

3. Create a Floor

Make your sketch 3 meters x 3 meters in size, with the Origin at the center. Click “Finish Sketch” to continue. If you’ve done everything right, then you should have a sketch that is fully constrained (i.e., you’ll see black lines instead of blue lines for the outer dimensions of your sketch).

Next, we’ll extrude the sketch below the plane. This will create a new body, based on our sketch dimensions.

Click Create and then Extrude. Then, extrude the sketch -1mm below the plane and click “OK.”

Next, Save the design. You’ve created your first body and now would be a good time to save your progress.

Note the reason for your save and Click “OK.”

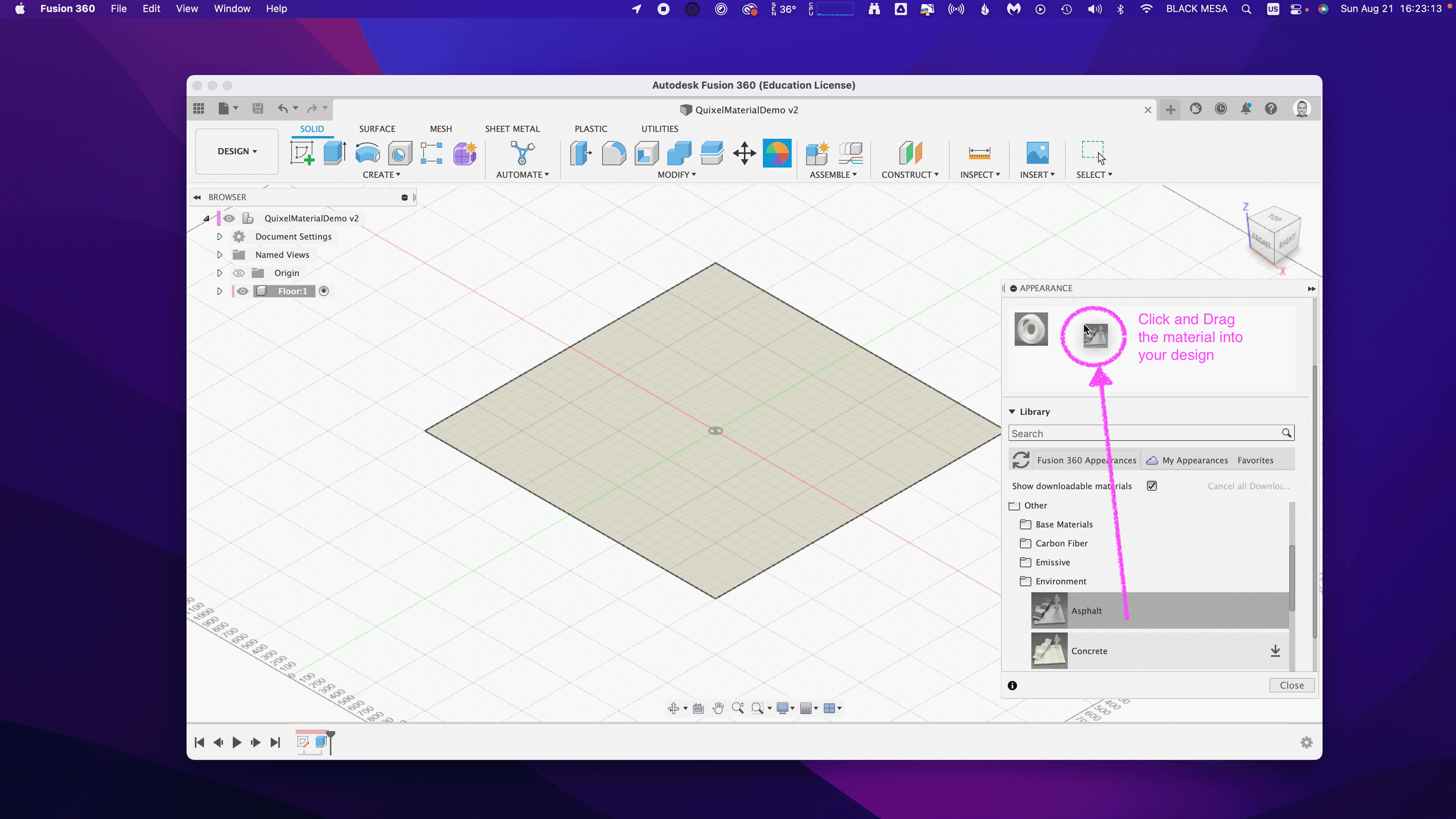

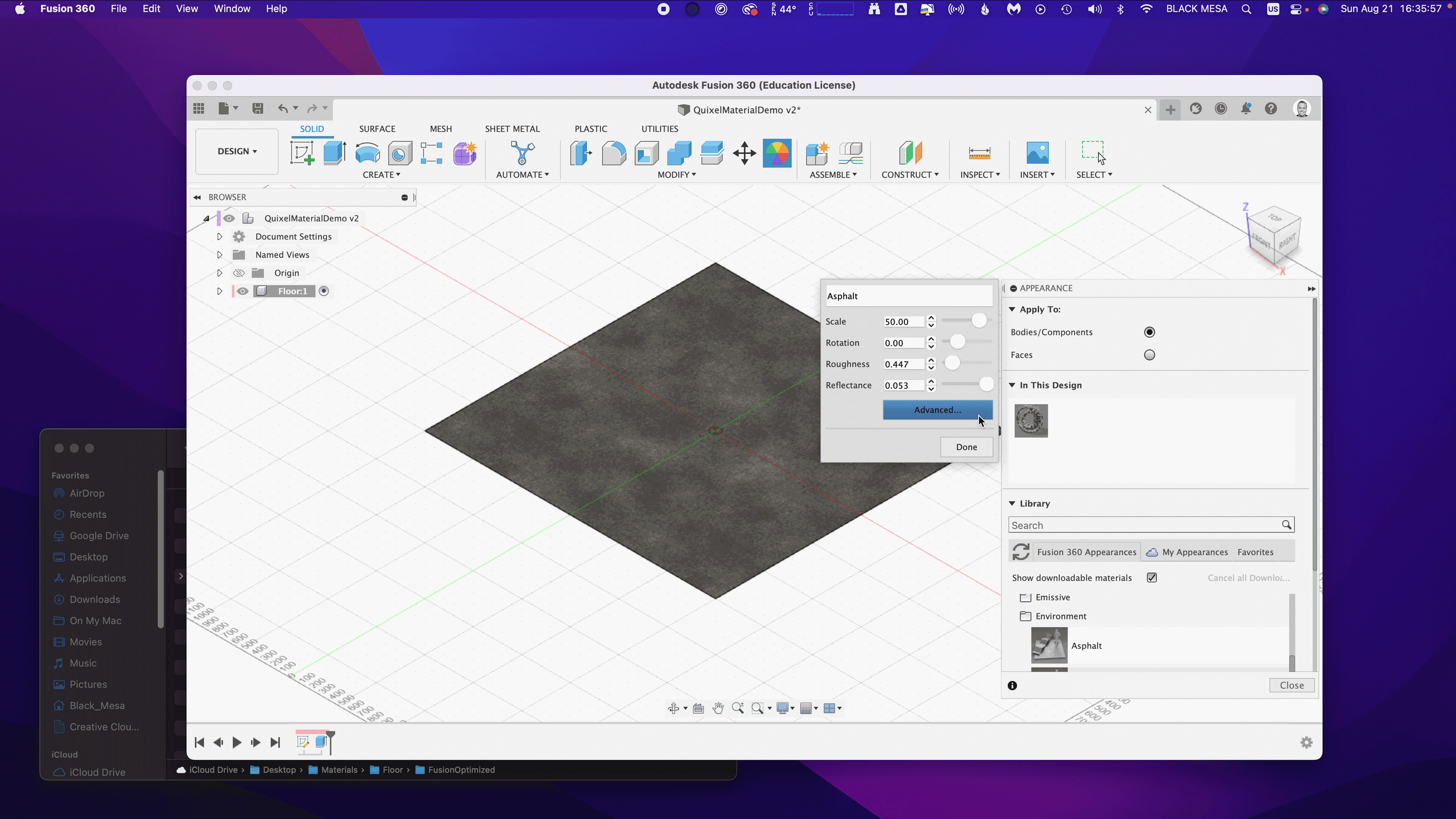

Next, we’ll want to change the Appearance of our floor. Click Modify Appearance to bring up the Appearance Window.

4. Add material

Here we can see the default material for the Floor body. We’ll want to replace that material with our Quixel Mix. To do that, let’s start by downloading a similar material.

Note: in general, you’ll find it is easier to add Quixel Mixer materials when you adapt an existing material in Fusion 360 with similar attributes. In this case, we can use the existing Asphalt Material.

After the download finishes, click and drag the Asphalt material into your design.

We can then replace the default material with the Asphalt.

5. Replace Fusion 360 Material with Quixel Mix

Next, we can begin modifying the Fusion 360 Asphalt material with the Quixel Mix.

As mentioned in Part 1, the materials in Fusion 360 are made up of individual map image files:

Albedo/Diffusion/Color — the color a material reflects

Normal and/or Height Maps — the bumps and imperfections along a surface

Roughness — the smoothness of a surface (ranging from a sharp reflection to fuzzy/diffuse)

Reflectance/Specular/Metalness — the reflectiveness of a surface (ranging from mirror finish to a dull surface)

Anisotropy/Ambient Occlusion — the shadows along a surface

Refractive —how light bends through a surface

Emissive — how much light a surface emits (glow)

Translucency/Opacity — how transparent a surface is to light

If you’re using the included sample images, you’ll find some but not all of these maps. Depending on what materials you’re mixing, you’ll need different image maps. The sample image package includes:

Floor_Diffuse.png — Color (placed in Parameters)

Floor_Roughness.png — Roughness (placed in Parameters)

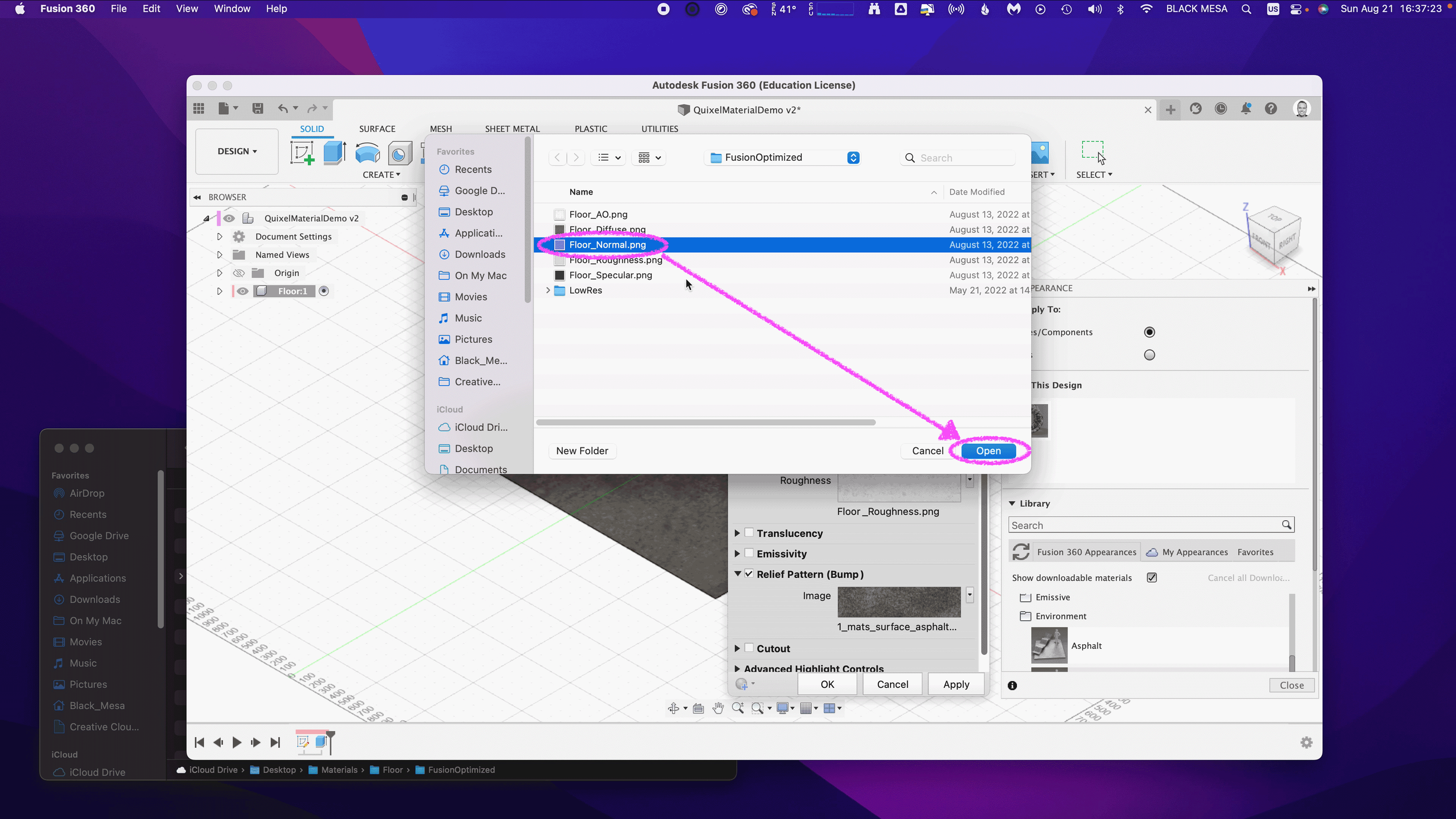

Floor_Specular.png — Reflectance (placed in Parameters)

Floor_Normal.png — Normal (placed in Relief Pattern (Bump))

Floor_AO.png — Anisotropy (placed in Advanced Highlight Controls)

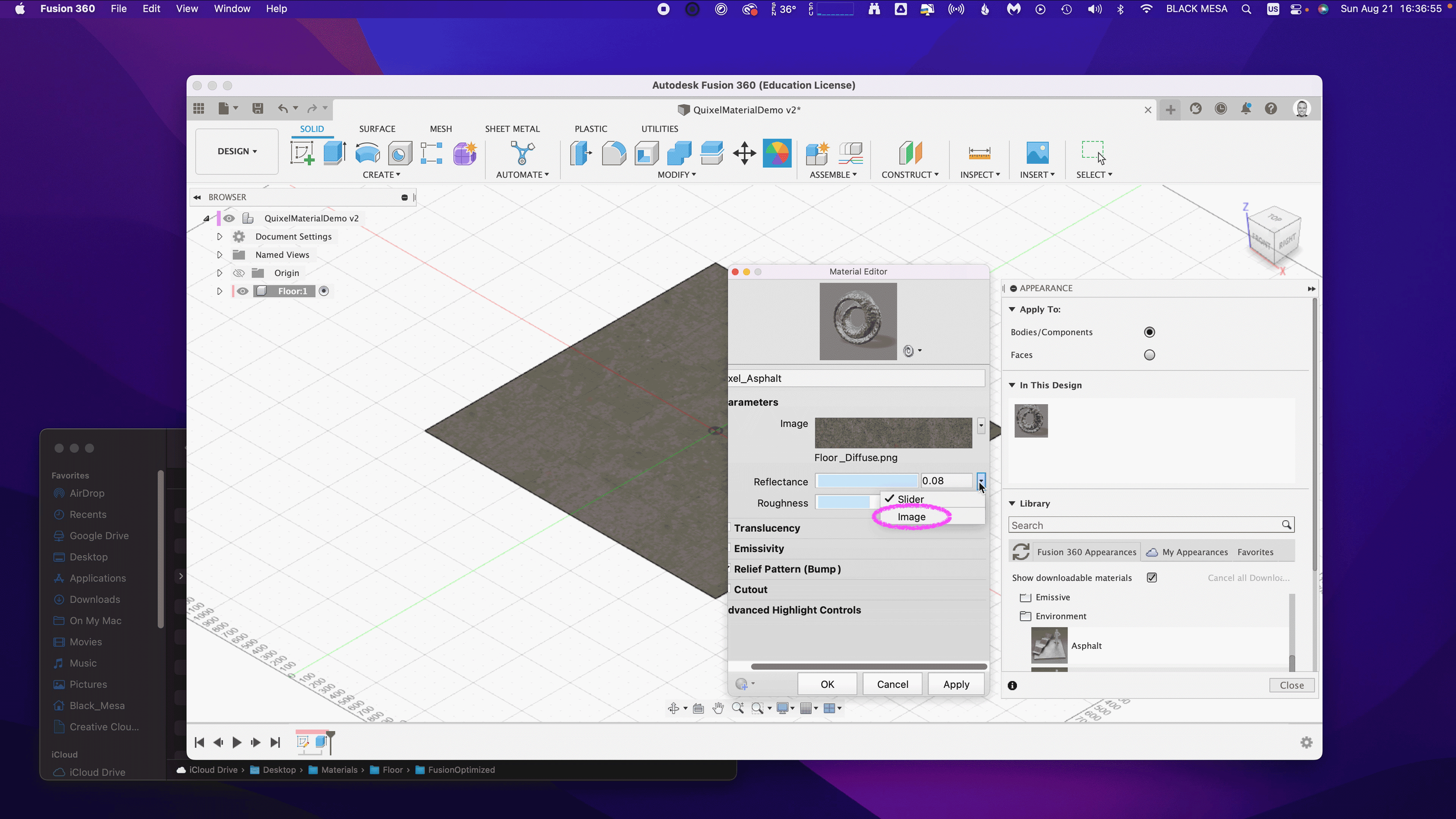

By replacing and adding these map files to the Fusion 360 Asphalt material, you can transform it to the Quixel mix. To start this replacement process, open the Appearance window, double-click the Asphalt material and then click “Advanced…”

Rename the material to “Quixel_Asphalt” to distinguish the material from the original Fusion 360 Asphalt.

Under Parameters, we can add three (3) image maps. First, we’ll apply the diffusion/color map to the Image input in Fusion 360. Click on the Image filename 1_mats_surface_asphalt_color.jpg and navigate to your replacement images.

Select your Albedo/Color/Diffuse map file. If you’re using the sample images, it’s the file named Floor_Diffuse.png. Click Open to replace the default image file.

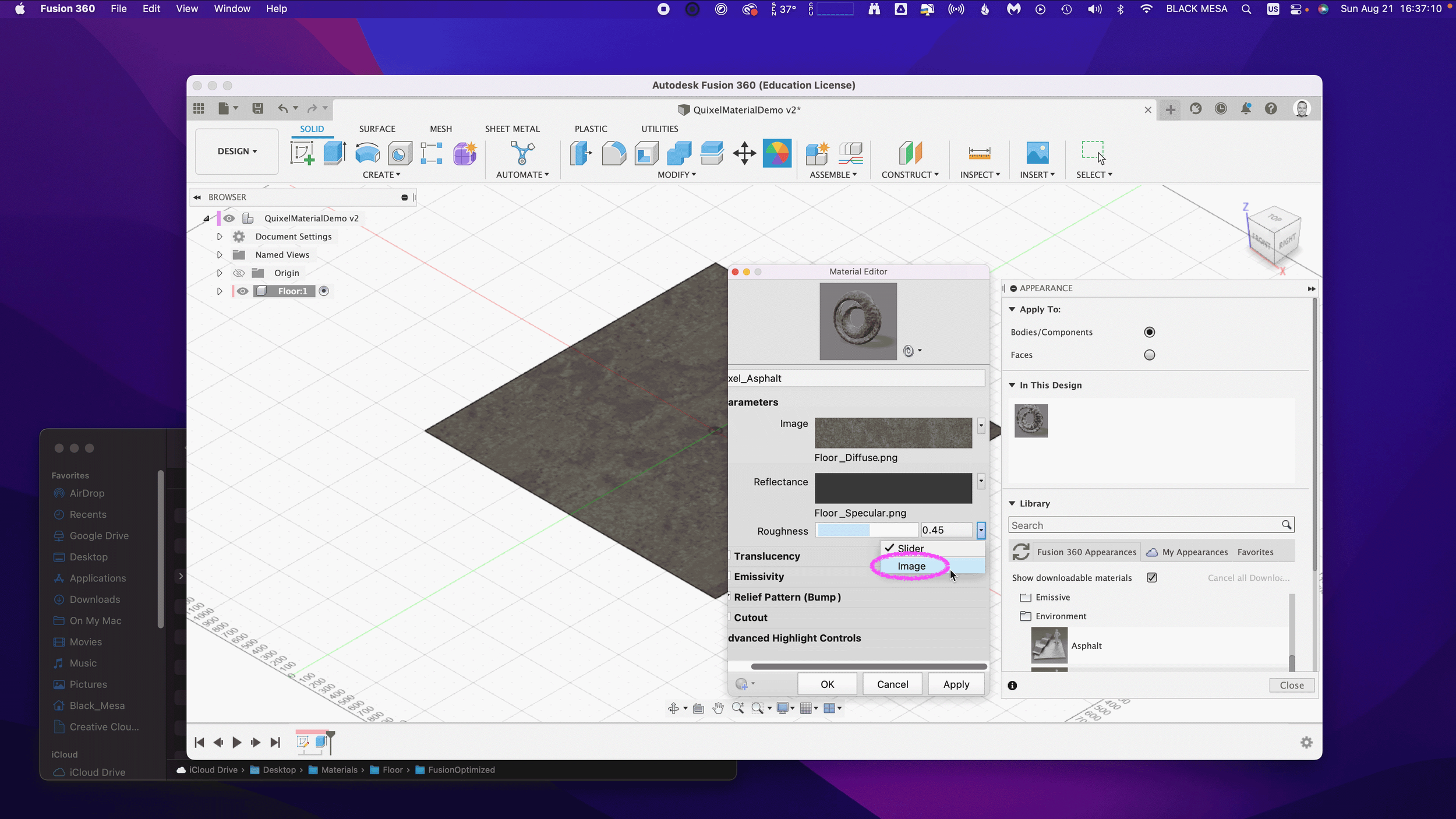

Next, we’ll repeat the process with the Reflectance and Roughness maps. By default, these two material attributes are set as Slider values. Click the drop-down arrow and then select Image to replace the slider value with an image map.

Next, select the Metallic/Specular image map. If you’re using the sample images, select Floor_Specular.png and click Open.

Next, repeat the same steps for the Roughness value. Select Image and then select your Roughness Map. If you’re using the sample images, select the Floor_Roughness.png.

Now that we’ve completed the three Parameter maps, we can move on to the Relief Pattern (Bump) map. Once again, we’ll replace the default image file (1_mats_surface_asphalt_.jpg) associated with the material. Note: Fusion 360 supports both bump and normal maps. If you want to know more about these two approaches to texturing a 3D model, then click here.

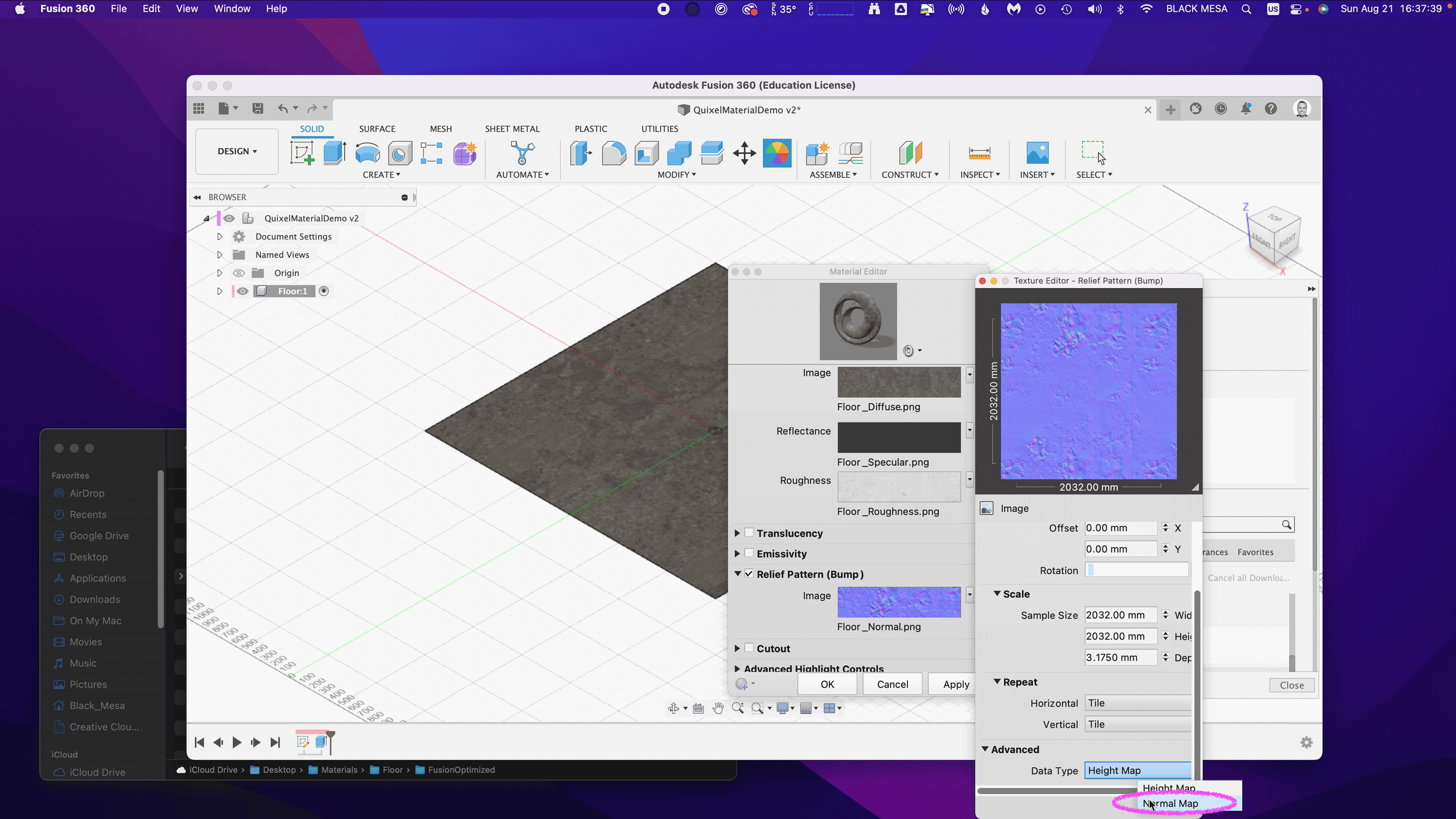

Next, we need to change the Relief Pattern from a Height Map to a Normal Map. To do this, we need to Edit the image.

Next, scroll down to Advanced and change Data Type to Normal Map.

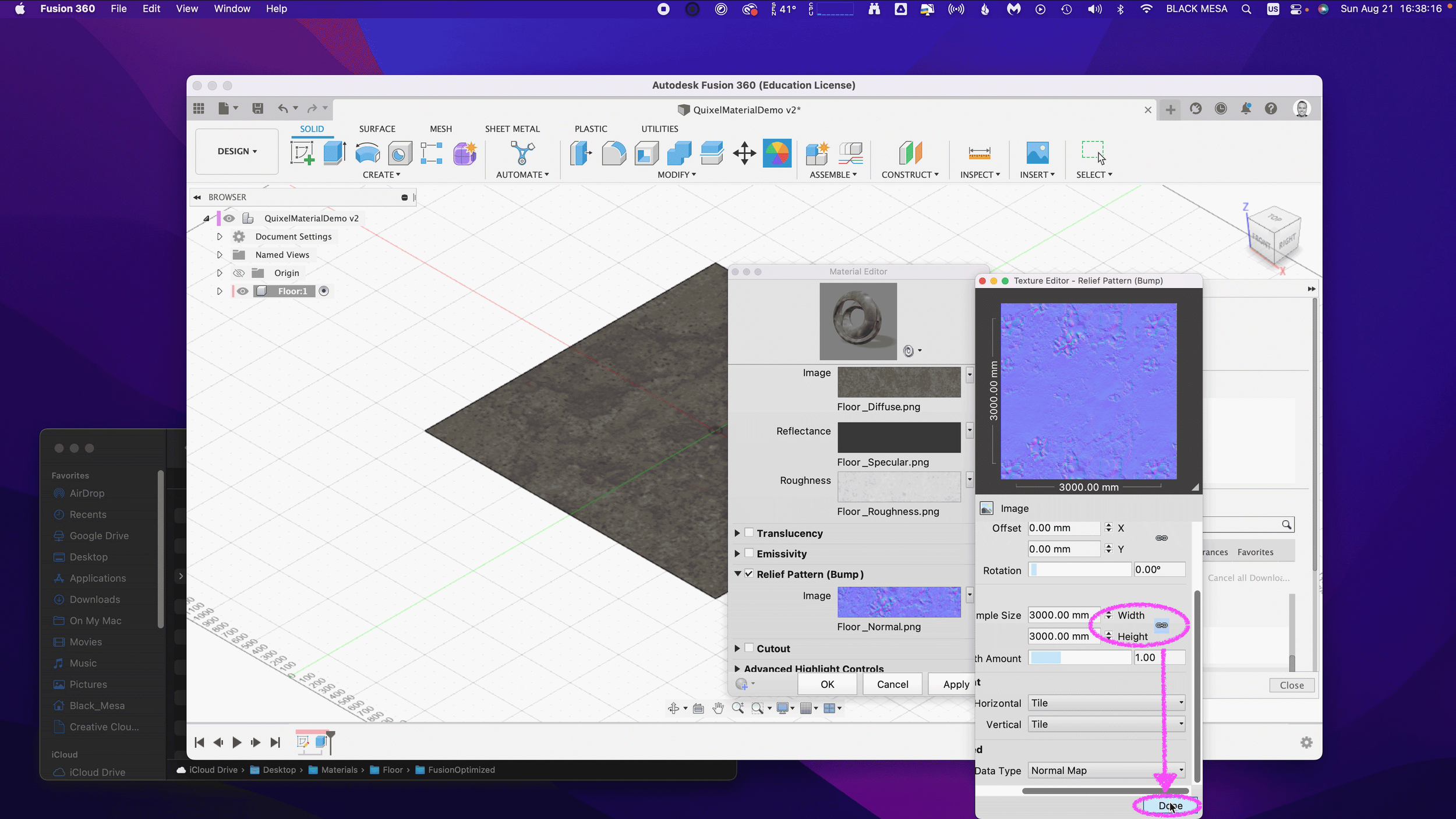

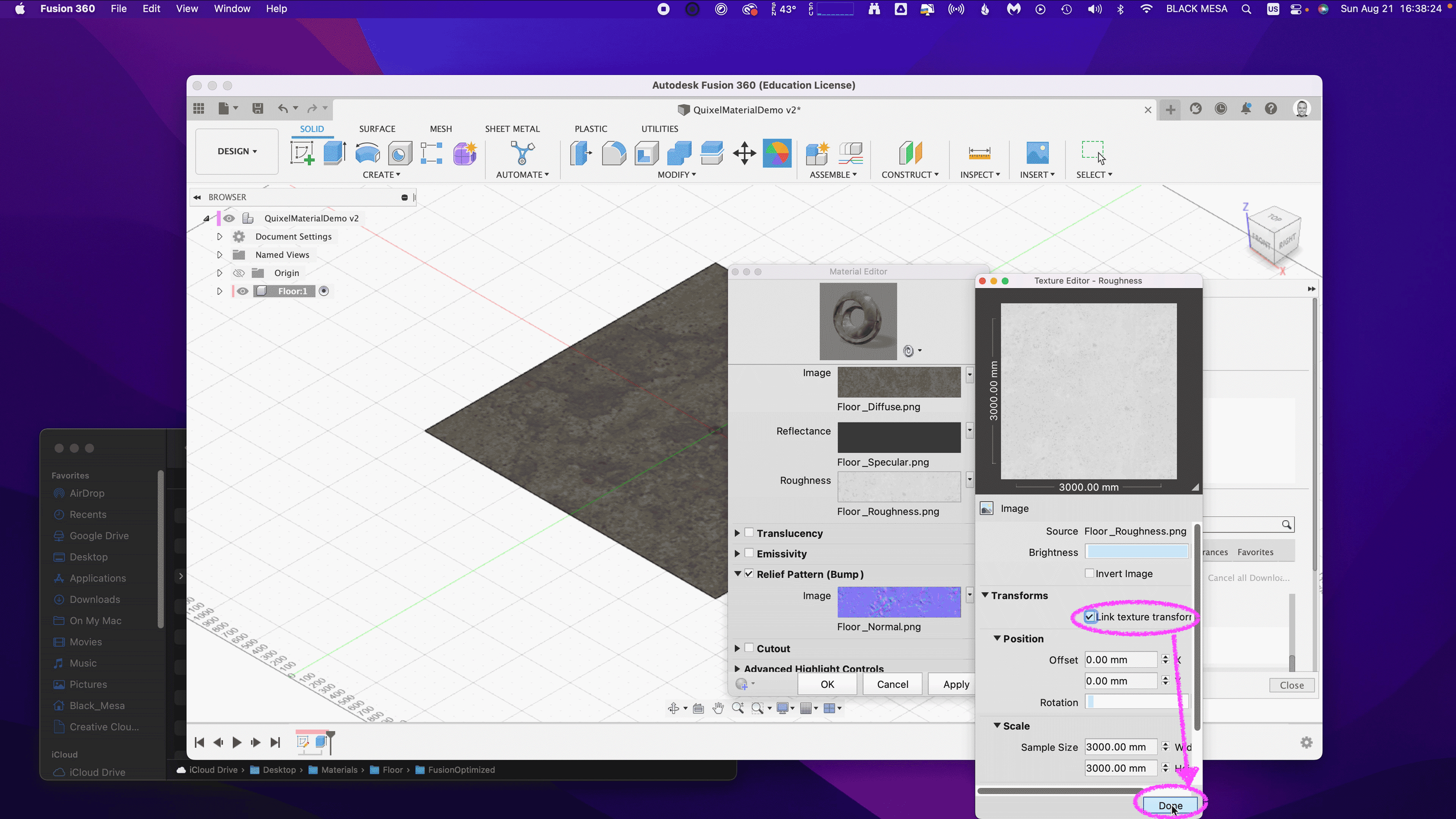

Next, we need to ensure that all of our maps are using the same Sample Size. Be sure to repeat this step for all image maps. We also need to ensure that all of our Maps have Linked texture transforms. Check the Link texture transforms under the Transforms section of the Texture Editor. Be sure to repeat this step for all image maps.

These steps are important, because they ensure that all of the image map data are aligned equally to the material in Fusion 360. After you’ve verified these settings, you can click “OK” to finalize the changes to this material.

Now that the material has been updated you can Close the Appearances window.

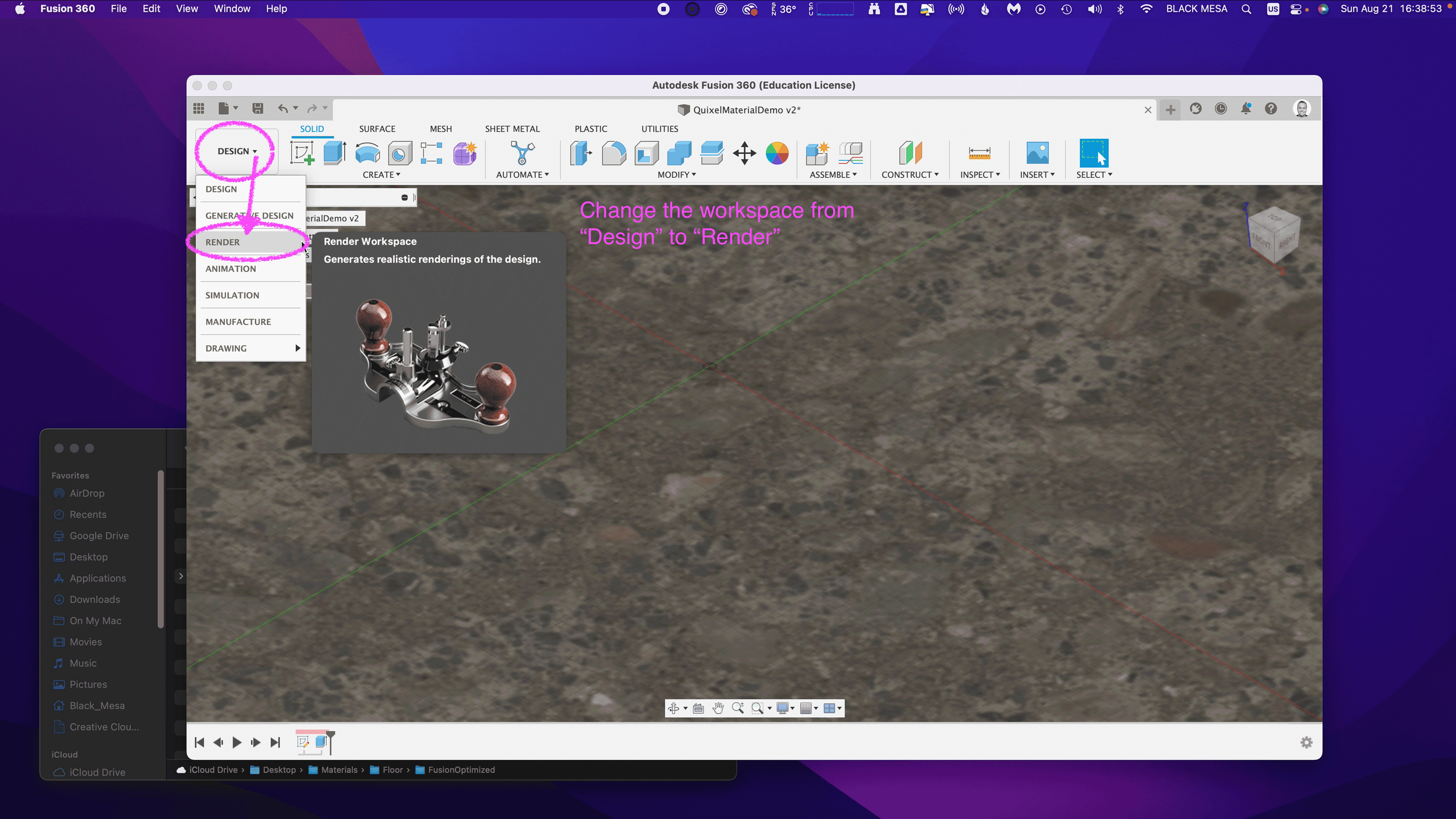

To check and validate our new material, we need to switch to the Render Workspace in Fusion 360. Click on the Workspace button, and change it from DESIGN to RENDER.

6. Test render scene

Next, let’s save the design to capture the new material settings in your Fusion 360 Timeline. Click File and Save.

Fusion 360 will prompt you to describe your save point. Let’s name this save “Quixel Material Added” and click OK.

Before we can test our new material, we need to edit the SCENE SETTINGS from the SETUP Menu. Open the SCENE SETTINGS window, Click+Drag “Dry lake bed” to the Current Environment, and then Click Close.

We also need to change the IN-CANVAS RENDER settings to FAST, so that we can easily see the material’s performance during rendering. To do this, click on the IN-CANVAS RENDER SETTINGS icon and Click on the Fast tab. Then, Click OK to update the rendering method.

Next, we can preview the rendering, and see how the various maps work together under different lighting conditions. To do this, start the In-Canvas Rendering and then open Scene Settings, click on the Position Icon to bring up the Rotation and Scale Sliders. By changing the rotation, you can see how the surface of your floor object casts shadows at different angles, corresponding to the surface material.

Make sure to save your project to retain your rendering settings. If you’ve made it this far, then congratulations! You now have all of the information necessary to import Quixel Mixer materials in Fusion 360. In Part 3, we’ll explore some techniques for applying these materials to complex geometries, and how to post-process your images for additional realism. In Part 4, we’ll take these realistic models and generate Augmented Reality experiences for iOS.

Stay tuned!

Sample models textured with Quixel Mixer PBR materials, rendered with Fusion 360

TUTORIAL: How to use ultra realistic Quixel Mixer materials with Fusion 360 [Part 1]

Picatinny Rail set with US quarter dollar coin for scale, Quixel PBR materials rendered with Fusion 360

Want to bring your Fusion 360 designs to life? Then let’s take a look at a wonderful alternative to Autodesk’s built-in materials library. First, what does Fusion 360’s material library look like?

ANATOMY OF FUSION 360 PBR MATERIALS

Before we can explore alternatives to Fusion 360’s included materials, we need to understand what elements these materials are made from. PBR (Physically Based Rendering) materials are built from multiple images:

Albedo/Diffusion/Color — the color a material reflects

Normal and/or Height Maps — the bumps and imperfections along a surface

Roughness — the smoothness of a surface (ranging from a sharp reflection to fuzzy/diffuse)

Reflectance/Specular/Metalness — the reflectiveness of a surface (ranging from mirror finish to a dull surface)

Anisotropy/Ambient Occlusion — the shadows along a surface

Refractive —how light bends through a surface

Emissive — how much light a surface emits (glow)

Translucency/Opacity — how transparent a surface is to light

We can access these different elements through the Appearance window in Fusion:

Preview image for Part 2 of this tutorial, where we’ll go over importing materials to Fusion 360.

Fusion allows you to modify existing materials through two primary mechanisms: Sliders and Images.

Elements of material can be controlled with Slider values or Images (i.e., material maps)

Sliders apply a value to the entire surface, whereas images allow for a per-pixel value (maps). Quixel Mixer generates images for their materials, and this is what allows us to apply a Quixel material to a Fusion 360 model.
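The slider-versus-image distinction can be sketched in a few lines of Python. This is only an illustration of the idea, not how Fusion 360 evaluates materials: `sample` is a hypothetical helper, the "map" is a tiny nested list instead of an image file, and the lookup is nearest-neighbor with no filtering.

```python
from typing import List, Union

TextureMap = List[List[float]]  # rows of per-pixel values in the range 0..1


def sample(parameter: Union[float, TextureMap], u: float, v: float) -> float:
    """Return a material value at surface coordinates (u, v), each in 0..1.

    A slider is a single number that applies everywhere on the surface.
    A map is looked up per pixel, so the value varies across the surface.
    """
    if isinstance(parameter, (int, float)):
        return float(parameter)  # slider: same value for every (u, v)
    rows, cols = len(parameter), len(parameter[0])
    row = min(int(v * rows), rows - 1)
    col = min(int(u * cols), cols - 1)
    return parameter[row][col]  # map: value depends on location


roughness_slider = 0.5
roughness_map = [[0.2, 0.8],
                 [0.4, 1.0]]

print(sample(roughness_slider, 0.75, 0.0))  # 0.5
print(sample(roughness_map, 0.75, 0.0))     # 0.8
```

With the slider, every point on the surface gets 0.5. With the map, the value changes as you move across the surface, which is where the worn, uneven look of a real material comes from.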

1. INSTALLING QUIXEL MIXER

Quixel Mixer is a free-to-use materials tool for use with Epic Games’ Unreal Engine. Technically, their EULA limits use of their materials to Unreal Engine only. However, there’s no reason why you could not import models from Fusion to Unreal (as an Autodesk .FBX file export), so it’s valid (in my opinion, and I’m not a lawyer) to preview materials in Fusion’s rendering engine prior to exporting to Unreal. Let’s install Quixel Mixer!

Go to quixel.com

Next, scroll down to “Download.”

After the download finishes, open the installer.

Click next, and you’ll see an option to install various material packs. Just like with Fusion 360’s material library, you can always install additional materials as needed. Click next to continue with the basic “Sample Mixes” pack.

Leave the default directory names suggested and click next until you reach the download screen. Depending on your internet connection and disk read/write speed, this will take quite some time. Get yourself a coffee or a beer, maybe take a walk if you’ve opted to download the rest of the material packs.

After the download finishes, Launch Quixel Mixer!

You’ll be presented with some sample mixes. Feel free to explore these, and when you’re ready to proceed, let’s make your first mix.

2. CREATING A NEW QUIXEL MIX

Create a new project.

Create a new mix.

Click “All Types” and then select “Surface” from the drop-down menu.

For this tutorial, I’m downloading the “Damaged Asphalt” material. Feel free to select something else if that material doesn’t inspire you.

After downloading the material, you’ll see a green checkmark next to it.

Click and drag the material to add it to your mix.

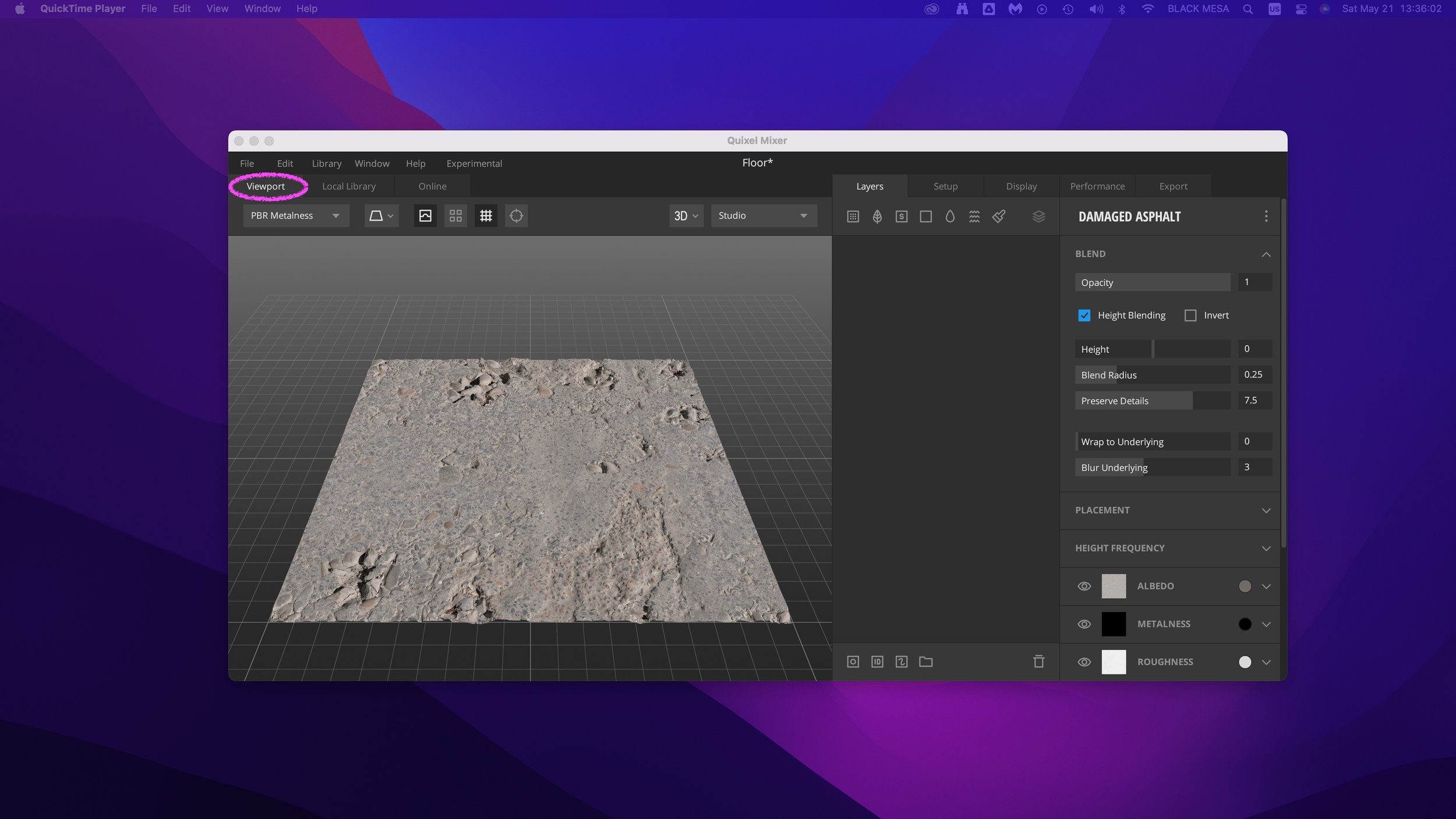

In the viewport, you can view this material as a preview.

There is a reason why this tool is called Quixel Mixer — it allows you to quickly and easily mix several PBRs into a new material. Let’s add another asphalt layer to this mix.

Once again, select a new material (e.g., “Cracked Asphalt”) and download it.

After the download finishes, and you see the confirmed green checkmark, simply drag the new material into your Layers column.

This will add the new material to the existing mix.

Try playing with the sliders on the right. You’ll see the changes to the material mix in real time in the Viewport.

Once you’ve created your desired appearance, you’re ready to export it.

3. EXPORTING QUIXEL MIXER MATERIAL MAPS

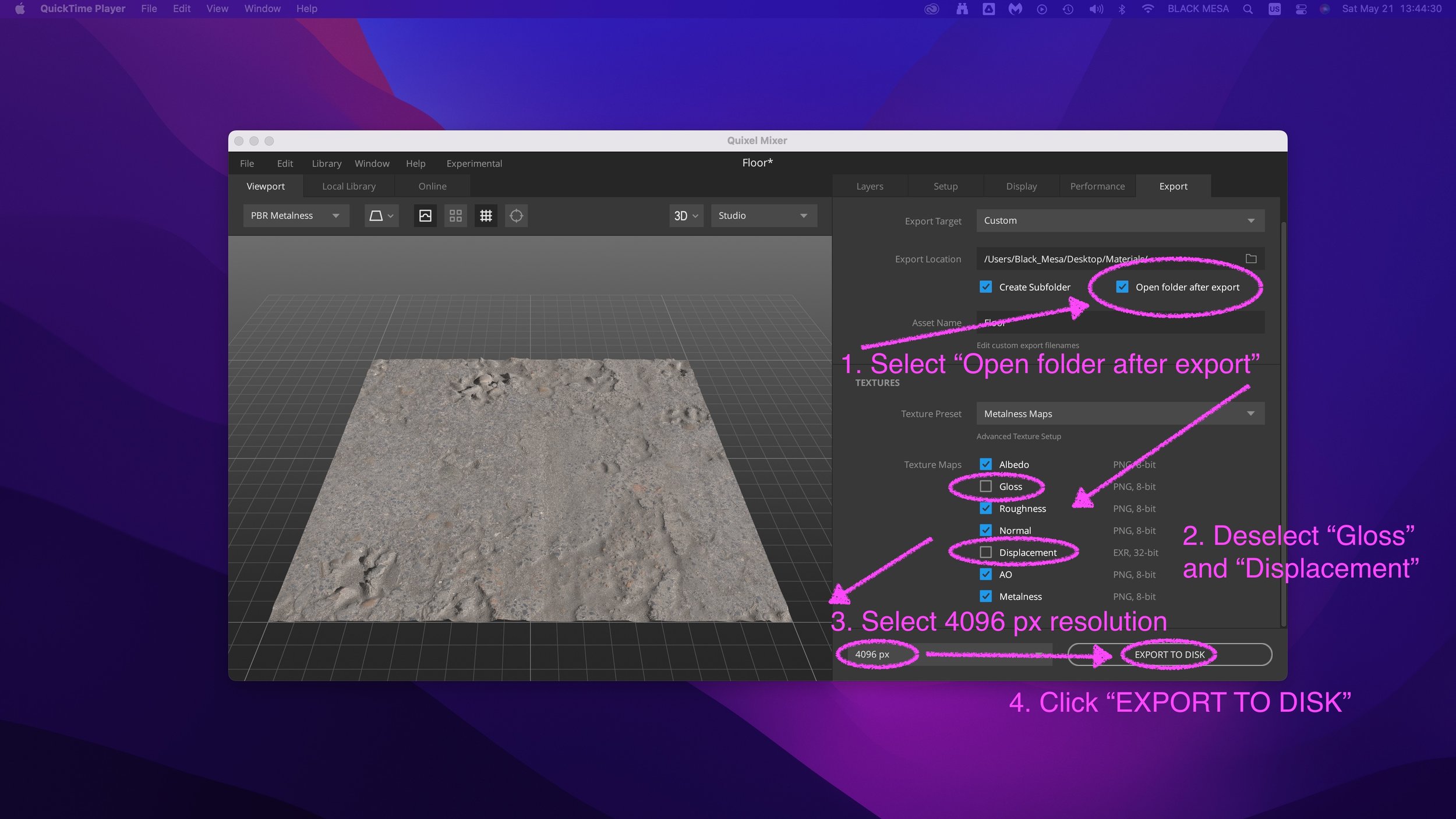

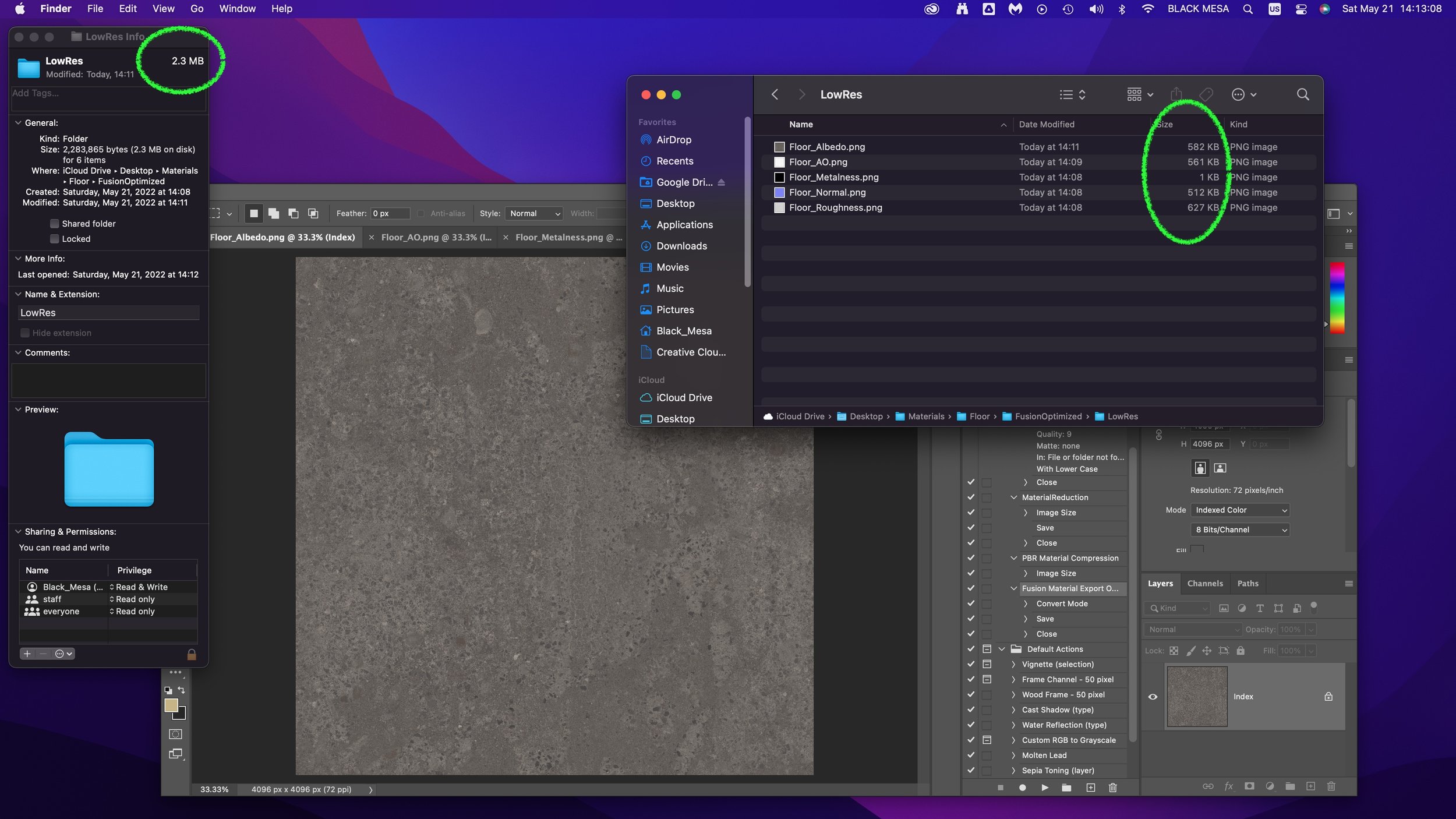

Select “Open folder after export” and then deselect “Gloss” and “Displacement” (we won’t be using these elements with Fusion 360), then select 4096 px from the dropdown menu (to export a 4k resolution mix), and finally — EXPORT TO DISK.

After the export finishes, you’ll see a window (Finder on macOS, Explorer on Windows) with your material maps. Notice that the file sizes are huge. That’s because these are high quality PNG files with an alpha layer. We’ll need to optimize these before bringing them into Fusion 360.

4. OPTIMIZING MATERIAL MAPS FOR USE IN FUSION 360

(optional, but recommended)

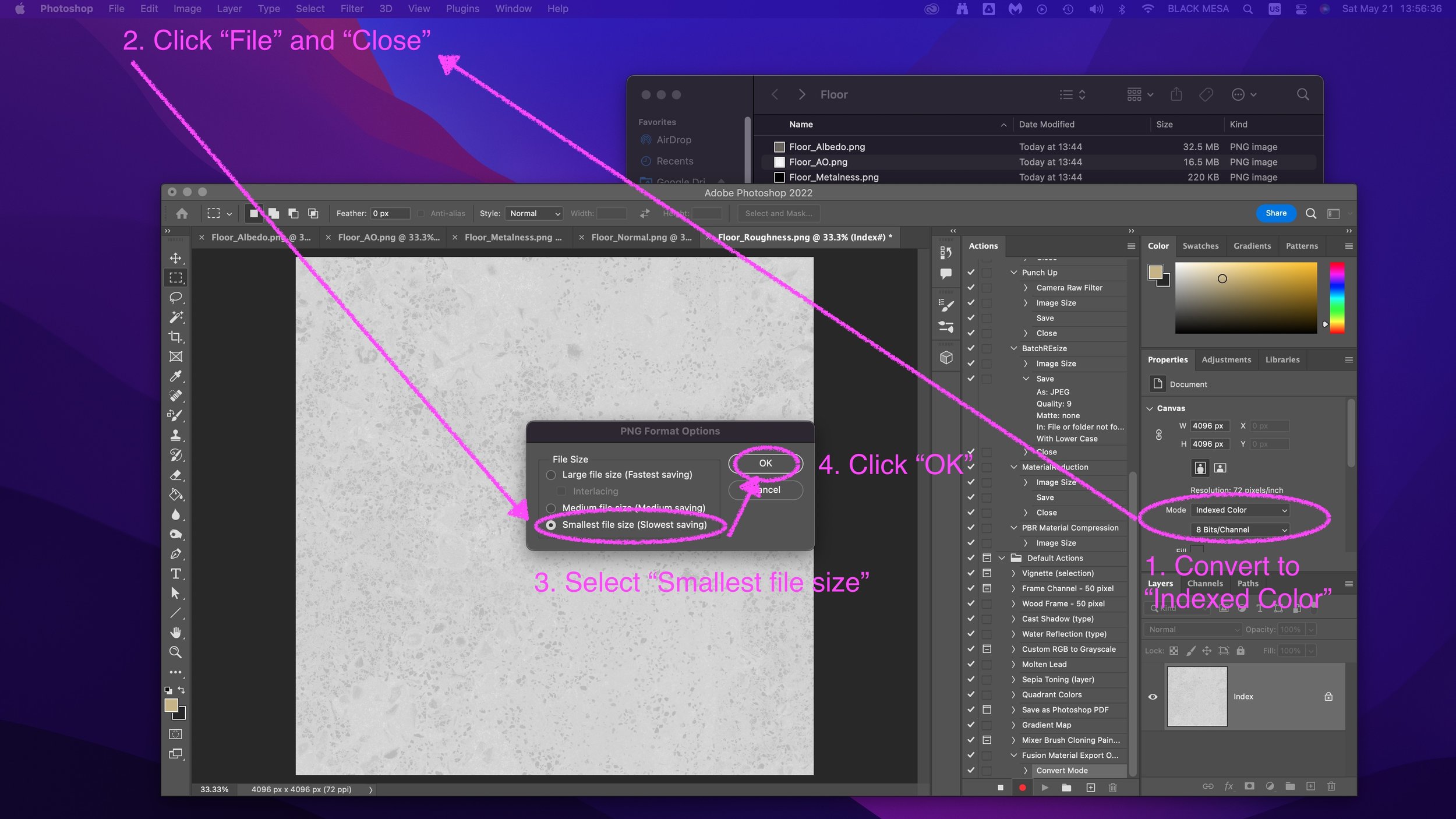

We can do this with Photoshop. Make a new folder for your optimized material files.

Open the material map images and create a new Action (open the “Actions” window and click the “+” sign) and name it something like “Fusion Material Export” and then click “Record” to begin capturing your actions.

From the “Image” menu, click “Mode” and select “Indexed Color.” Set the color convert to “Local Perceptual” and set the color limit to 64. Click OK, and then go to “File” and “Close.” Select “Smallest File Size” and Click OK.

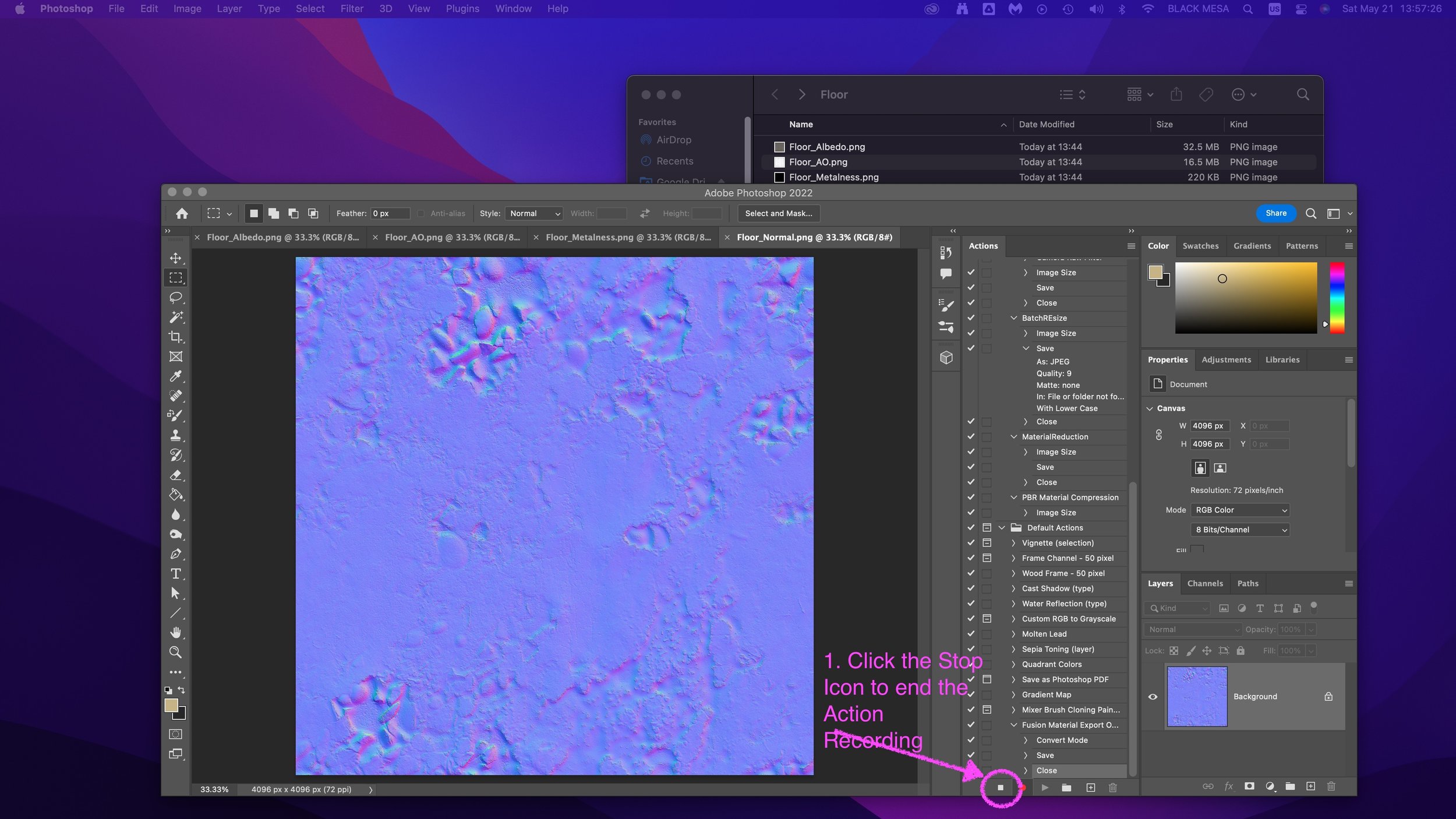

Stop the Recording by clicking the Stop icon (a gray square).

For the next image, we can now repeat the action by clicking play.

As you can see, we shrank the file to less than a third of its original size, with virtually no loss in quality. Not bad!

If you want to shrink the files further, you can also export lower resolution images. In this example, I selected 1024x1024 (1K resolution) and reduced the color count to 8-bit (256 color limit). This is a good option, especially if you want to create Augmented Reality versions of your design.

As you can see, the low resolution exports shrink down to less than 3MB. You’ll lose some image quality, but the file size is reduced by over 97%.
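For a rough sense of where a reduction like that comes from, here is a back-of-the-envelope calculation in Python. It compares raw, uncompressed pixel data only, and it assumes the 4K export is RGBA at 8 bits per channel, so the numbers will not match the PNG file sizes on disk. The function names are my own.

```python
def raw_size_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Uncompressed size of an image's pixel data, in bytes."""
    return width * height * bits_per_pixel // 8


def percent_reduction(before: int, after: int) -> float:
    """How much smaller `after` is than `before`, as a percentage."""
    return 100.0 * (before - after) / before


full = raw_size_bytes(4096, 4096, 32)  # 4K, RGBA, 8 bits per channel (assumed)
small = raw_size_bytes(1024, 1024, 8)  # 1K, 256-color indexed

print(full, small)                     # 67108864 1048576
print(percent_reduction(full, small))  # 98.4375
```

Dropping from 4K to 1K cuts the pixel count by a factor of 16, and going from 32 bits to 8 bits per pixel cuts it by another factor of 4, which lines up with the reduction I saw in practice.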

In Part 2 of this tutorial, we’ll go over how to bring these materials into Fusion 360.

Scripting in Blender

For folks who use Blender to create animated characters for Unreal Engine, you might find the process of creating a rig fairly tedious. You may also discover that some constraints in Blender are causing problems with your exported skeleton in Unreal.

If you need to sanitize your bone constraints, this work can be labor intensive if done manually.

Here’s a simple script that will automate that process. (shoutout to manuDesk for providing this)

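    import bpy

    # Remove every matching constraint from the currently selected pose bones.
    for bone in bpy.context.selected_pose_bones:
        # Loop over a copy, since removing items from the collection
        # while iterating over it directly can skip constraints.
        for c in list(bone.constraints):
            if 'Constraint Name' in c.name:
                bone.constraints.remove(c)  # Remove constraint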

Replace “Constraint Name” with the name of the constraint you wish to remove from your rig, and then run the script. This could save you hours of work.

Suppose you need to copy constraints between a control rig and a deform rig. CGDrive has provided an entire video tutorial on this process. Below is the code used in their script. Watch the video for a basic workflow, as there is some preparation necessary for this script to work.

    import bpy

    sel = bpy.context.selected_pose_bones
    for bone in sel:
        name = bone.name
        bpy.context.object.pose.bones[name].constraints["Copy Transforms"].subtarget = name
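One caveat with the loop above: indexing `constraints["Copy Transforms"]` raises a `KeyError` on any selected bone that has no constraint by that name. A more forgiving variation, as a sketch: `retarget_copy_transforms` is a hypothetical helper, not part of CGDrive's script. It uses `.get()` to skip such bones and reports them afterward.

```python
def retarget_copy_transforms(pose_bones, constraint_name="Copy Transforms"):
    """Point each bone's constraint at the same-named bone; return skipped bone names."""
    skipped = []
    for bone in pose_bones:
        constraint = bone.constraints.get(constraint_name)
        if constraint is None:
            skipped.append(bone.name)  # no such constraint on this bone
            continue
        constraint.subtarget = bone.name
    return skipped


try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None

if bpy is not None and bpy.context.selected_pose_bones:
    missing = retarget_copy_transforms(bpy.context.selected_pose_bones)
    if missing:
        print("No Copy Transforms constraint on:", ", ".join(missing))
```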

Summer Internship: mid-July Update

It’s been nearly a full month since my last update, so what have I been working on?

The last few weeks have been incredibly busy. I’ve had several interviews and job application submissions. My gallery and design pages received some helpful updates. I’ve successfully built a standardized data format for a visualization project and introduced some of my fellow interns to the history and purpose of data visualization, and its role in matters of social justice and equality. I’ve also continued to make leaps and bounds on my 3D character work.

I’ve resolved the primary issue that was plaguing me early on in this project: mesh topology.

To be efficient for a game engine, it’s important to resolve mesh geometry with minimal faces. Unreal Engine 5 supports a new level-of-detail automation called “Nanite,” which allows 3D modelers to create elements with a virtually unlimited number of faces (geometric detail). This does not seem to work with animated characters, however. If you know a way around this, please send me a message!

A “face,” in this context, refers to a plane that has 3 (tris) or more points. Ideally, the geometry should have faces with 4 points (quads). While modern engines and graphics hardware have gotten significantly more advanced since the early days of 3D gaming, it’s nevertheless still important to avoid models with excessive polycounts (the sum total of faces in a model).
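Graphics hardware ultimately renders triangles, so it can be handy to estimate the triangle count alongside the face count. A rough sketch in Python, assuming each face is described only by its number of corners (a face with n corners splits into n - 2 triangles). The function name and the sample mesh are made up for illustration.

```python
def count_faces_and_triangles(face_corner_counts):
    """Return (face_count, triangle_count) for a list of per-face corner counts."""
    faces = 0
    triangles = 0
    for corners in face_corner_counts:
        if corners < 3:
            raise ValueError("a face needs at least 3 points")
        faces += 1
        triangles += corners - 2  # tri -> 1, quad -> 2, pentagon -> 3, ...
    return faces, triangles


# Hypothetical mesh: 100 quads, 10 tris, and one 6-sided n-gon
sample = [4] * 100 + [3] * 10 + [6]
faces, triangles = count_faces_and_triangles(sample)
print(f"{faces} faces, {triangles} triangles")
```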

The challenge with my model is that it requires several tangent geometries that intersect with a central body. Imagine you were modeling a tree character, and you needed to cover the various branches with leaves, blossoms, or pine needles. Blender can easily generate these elements using weighted vertex mapping and particle systems (hair). The difficulty, however, comes from trying to export this data for use in Unreal Engine.

Unreal Engine supports FBX models for import, but FBX models do not support shape keys. By default, Blender applies the Particle System modifier as a shape key. You can, however, convert particle instances into their own individual body/mesh component in Blender prior to export. This works, but Unreal will interpret the model as several individual bodies with shared coordinates. When exporting this type of arrangement, animation rigs cannot deform the free meshes: your leaves (or whatever) will stay in place while the rest of the tree animates.

You may already be thinking to yourself, “why not just combine the meshes?” And since the beginning of this project, I anticipated that this might be necessary at some point. The challenge then is how to create a combined mesh that is free of visual artifacts. Additionally, the boolean modifier in Blender only allows you to select a single target body to combine (union).

Thankfully, there is a Blender add-on for this last issue: BoolTool.

Suppose you want to combine all of your individual leaf meshes to the main tree body, but you know it would be insanely time consuming to go through the tedious sequence of selecting each leaf, one at a time, applying the modifier, creating a new modifier, and repeating that x700.

You could try writing a script to automate this process, but you’d need to write it in Python, and that would be a can of worms unto itself. You can already imagine the mission creep setting in…

BoolTool makes this process dead simple. You just select the mesh body you want everything to join with (active object), and then select all of those other meshes. Go to the BoolTool menu and select “union.” You may think that Blender has crashed, but give your computer a break. Grab a cup of coffee, meditate, embrace the here-and-now… *BING* [Your mesh combine operation is complete!]

Assuming you created an efficient mesh, primarily out of quad faces, with intersecting meshes containing the same number of vertices, then you should have a single mesh geometry that is ready for rigging and animation.
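For the curious, the manual sequence described earlier (add a Boolean modifier, set it to union, apply, repeat) could be scripted roughly like this. This is a sketch of the built-in modifier workflow, not of how BoolTool works internally, and it has not been compared against the add-on for speed or mesh quality. `union_into` and the `apply_modifier` callback are names made up for this example. Inside Blender the callback wraps `bpy.ops.object.modifier_apply`, which acts on the active object, so run it in Object Mode.

```python
def union_into(target, others, apply_modifier):
    """Union every object in `others` into `target`, one Boolean modifier at a time."""
    applied = 0
    for other in others:
        if other is target:
            continue  # never union the target with itself
        mod = target.modifiers.new(name="AutoUnion", type='BOOLEAN')
        mod.operation = 'UNION'
        mod.object = other
        apply_modifier(mod.name)  # bakes the result into the target's mesh
        applied += 1
    return applied


try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None

if bpy is not None and bpy.context.active_object is not None:
    # The active object is the body everything else joins with
    count = union_into(
        bpy.context.active_object,
        list(bpy.context.selected_objects),
        lambda name: bpy.ops.object.modifier_apply(modifier=name),
    )
    print(f"Applied {count} union operations")
```

Note that this sketch leaves the original leaf objects in the scene, so they would still need to be deleted or hidden afterward.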

I’ve tried dozens of other approaches so far, and this method produces the best results all-around: faster, easier to execute, high fidelity, and with the lowest poly count.

I’m still working out the kinks on my current model, and the animation sequences will be a next-level creative challenge for me (I have a lot of inspiration and ambition on this front), but I feel confident in the current direction, and early tests have been quite promising.

I’m also starting to make simple prototypes for augmented reality, and will add some notes about that later this month.

Week 3 and 4 Update: 3D content migration woes

This update is coming in late, as I’ve been trying to come up with some way to explain the difficulties I’m facing with this project, while also respecting the privacy and IP. I’m leaning heavy on metaphor, but…

Imagine there are three people sitting at a bar. One of them speaks Dutch, English, and German (but only okay German, not great). Another speaks Spanish, some English (okay-ish grammar, few nouns), and fluent German. The last person at the table is a little bit unusual. They’re a rapper from Japan, they speak fluent Japanese and a little English. As a child they were an exchange student in Germany, but they’ve forgotten most of what they learned. The rapper also insists on speaking-as-quickly-as-their-mouth-can-run. They never slow down. Speed is everything.

They all have some overlap, however imperfect, in their spoken languages, but none can understand each other perfectly. This is what it feels like to develop assets for a still-in-beta realtime engine, while leveraging a parametric modeler, an open source 3D creator tool, and adhering to standards from a professional VFX and CGI-specific 3D tool.

This week my primary focus has been on getting Blender and Unreal Engine to talk to each other. Unreal prefers the FBX file format (an Autodesk interchange format). Blender can export most data in this format, but there are a few catches:

No shape keys

Subdivision data is limited to a single iteration

Dynamic animation can only be exported with baked physics, and is limited to vertices and face transformations (kind of, depending on what you’re doing).

Additionally, Unreal doesn’t quite understand Blender’s material system. It will still export textures and UV map data, but you’ll need to build the material blueprint to recreate whatever you have in Blender. It is far from being a 1:1 exchange.

There are also many weird quirks:

In Blender, the default unit is meters. In Unreal, the default unit is centimeters. Before exporting from Blender, you need to set Unit Scale to 0.01. If you switch units in Blender to centimeters and leave the Unit Scale at the default of 1.0, then you’ll experience strange anomalies for things like collider bodies, skeleton mesh, etc.

When naming the rigging elements (IK skeletons, etc.), do NOT name any bones “root,” because Unreal will assume a hierarchy which may differ from your hierarchy (parenting) in Blender. However, you may rename the Armature container to “root” to conform to Unreal’s hierarchy.

Lastly, the only rigging data that reliably translates between Blender and Unreal Engine are deform bones and baked animations/transformations.
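The quirks above lend themselves to a small pre-export check. The sketch below is a convenience built on assumptions, not an official workflow: the helper names are made up, and matching “root” case-insensitively is a cautious guess rather than documented Unreal behavior. Inside Blender it sets the scene's Unit Scale through `unit_settings.scale_length` and warns about offending bone names in the active armature.

```python
BLENDER_TO_UNREAL_UNIT_SCALE = 0.01  # the Unit Scale value recommended above


def meters_to_centimeters(meters):
    """Blender's default unit is meters; Unreal's is centimeters."""
    return meters * 100.0


def bones_named_root(bone_names):
    """Return bone names that could clash with the root Unreal assumes."""
    return [name for name in bone_names if name.lower() == "root"]


try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None

if bpy is not None:
    bpy.context.scene.unit_settings.scale_length = BLENDER_TO_UNREAL_UNIT_SCALE
    armature = bpy.context.active_object
    if armature is not None and armature.type == 'ARMATURE':
        clashes = bones_named_root(bone.name for bone in armature.data.bones)
        if clashes:
            print("Rename these bones before export:", clashes)
```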

I’ve reached a stumbling block with my current 3D character. I can rig the character to animate, and even output that data in a manner which Unreal Engine can interpret. This comes at the expense of a vital visual element that was procedurally generated. What comes next is a difficult choice:

I can either integrate procedurally generated elements into a single mesh geometry (potentially compromising some rendering performance and visual fidelity) but without having to rework existing animation and rigging, or I can attempt to recreate the procedural mesh instancing I developed inside Blender but natively within Unreal. The former will be labor intensive, but I understand the tools well enough to work consistently toward a known output. The latter involves many unknowns, but I will also gain a deeper understanding of workflows within Unreal Engine. I’m attracted to this latter option, but I don’t know if it is best for the client and their expectations.

Week 2: Summer Internship

This week I continued working on 3D asset creation. My basic approach so far has been to start with a simplified geometry from Fusion 360, then export that design as an .FBX (an Autodesk interchange format), import the FBX to Blender for UV mapping, materials, and motion rigging. There’s probably a more streamlined way to generate this content, but from a feasibility standpoint, this approach allows me to be flexible and to use different tools for discrete tasks. This week I will be importing these combined assets into Unreal Engine.

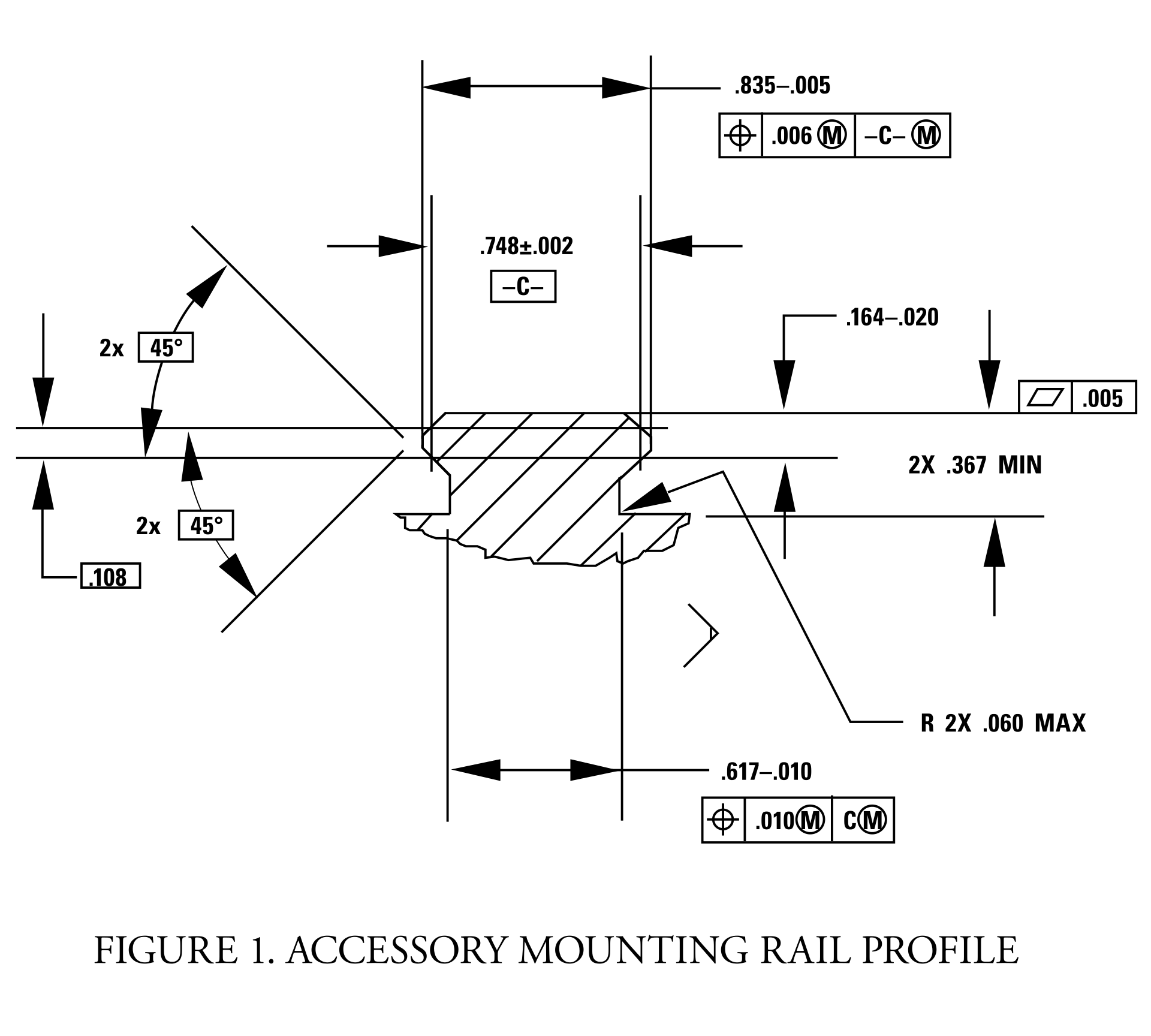

This week was also my final week of the term at PCC, where I am enrolled in their online course for Advanced Fusion 360. I’ve been working on a group project, designing assemblies for use in a solar projector system. The design is based on COTS (commercial off-the-shelf) parts, which required me to draft profiles to meet engineering specifications.

Picatinny rail specification downloaded from wiki-commons.

The final deliverables are due this coming Saturday, and there is still a good bit of work to be done before we get graded on this project. Nevertheless, I am very pleased with the current state of things. I’ve been using Quixel Mixer to produce more realistic rendering materials than the library included with Fusion 360. I say “more” realistic because Fusion 360 already has some excellent materials. Take a look at this rendering of a Bushnell 10x42 monocular (one of the components in this project):

I haven’t yet added any details, but as you can see, the rubberized exterior and textured plastic hardware are fairly convincing. Now, take a look at the mounting hardware rendered with Quixel textures:

An important component in photorealism is the inclusion of flaws. Real life objects are never perfectly clean, perfectly smooth, or with perfect edges. Surface defects, dirt, scratches, and optical effects play an important role in tricking the eye into believing a rendering. With Quixel Mixer, it is possible to quickly generate customized materials. While this product is intended for use with Unreal Engine and other real-time applications, it does an amazing job when coupled with a physically based renderer.

Picatinny rail set with hardware and bracket.

I’m excited to see what can be done with these materials in a real-time engine, especially given the advanced features of Unreal Engine 5. Fusion 360’s rendering is CPU driven, whereas Unreal is GPU accelerated. With both Nvidia and AMD now selling GPUs with built-in raytracing support, it won’t be long before we see applications that offer simultaneous photorealism rendering within modeling workflows.

Additionally, GPUs work extremely well as massively parallel computing units, ideal for physical simulations. This opens up all kinds of possibilities for real-time simulated stress testing and destructive testing. It wasn’t that long ago that ASCI Red was the pinnacle of physical simulation via supercomputer. Today, comparable systems can be purchased for less than $2,000.

Of course, this price assumes you can buy the hardware retail. The current chip shortage has inflated prices more than 200% above MSRP. Fortunately, with crypto markets in decline and businesses reopening as vaccination rates exceed 50% in some regions, there are rays of hope for raytracing-capable hardware being in hand soon.

Week 2 mini-update: outputting video from Unreal Engine 5

Usually, I only update my blog on Sunday nights — I like to reflect after the week is done and I’ve had a full dose of daylight to consider what matters.

I’m breaking that rule because I’ve learned something that I think might be useful to others. Last week, Epic Games released a preview of Unreal Engine 5. If you haven’t looked at this tech, it’s worth your attention. We’re rapidly approaching a point where individual creatives (equipped with modern hardware) will be capable of producing photorealistic graphics in realtime. This is due to a convergence of procedurally-generated content, open libraries providing physically based materials, templates, and raytracing technology.

I’m a huge advocate for 3D technology. Being able to show something instead of telling it is huge. Consider all of the times in your life that you had an idea, something that you could clearly, vividly see inside your mind, but you felt was difficult or impossible to describe? What if you had the tools to quickly take your idea and represent it visually, with no limits to fidelity or realism? These tools exist, and they are getting better every day. Additionally, many of these tools are free and have a wealth of community-led learning and support.

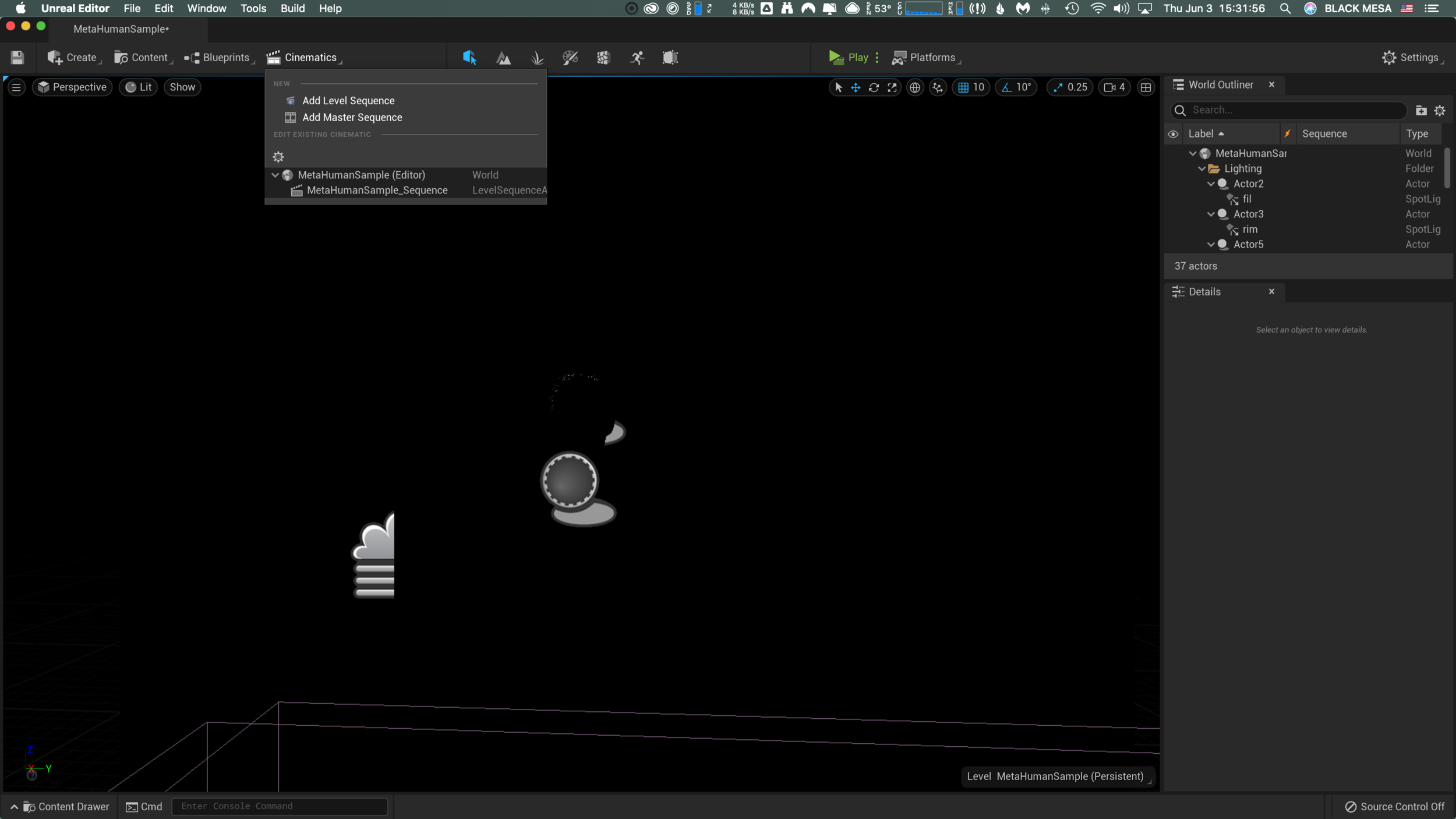

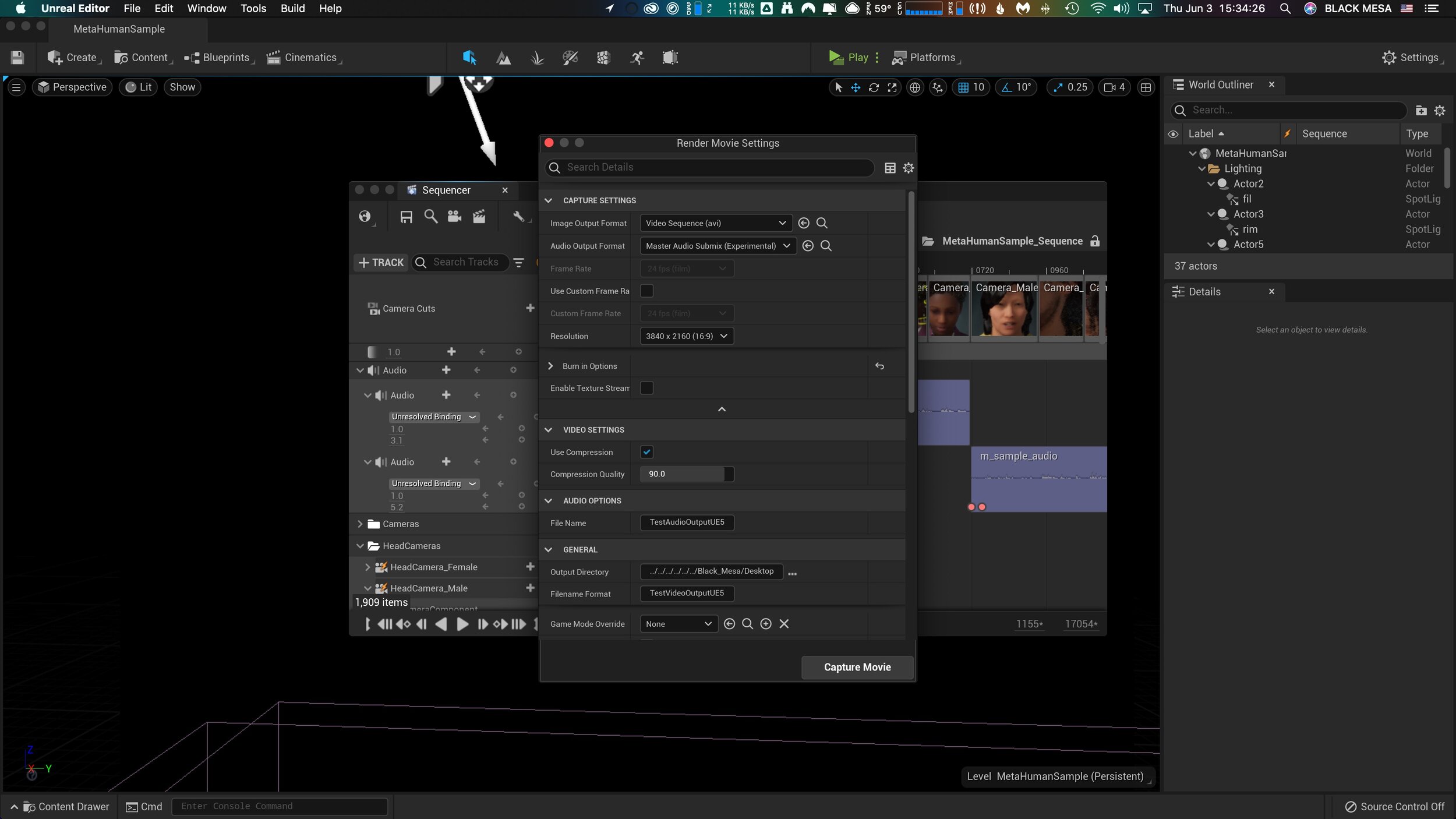

Today I was asked to come up with a way to capture video from Unreal, and I discovered a great way to do it in Unreal Engine 5. Here’s how!

Geigertron’s Very Practical Guide to Exporting Video From Unreal Engine 5

For this example, I’m using a sample project based on MetaHuman Creator. You can download the UE5 preview yourself; it’s all free!

1) After opening the sample project, click on the clapperboard icon (“Cinematics”).

2) In this example, there’s already a sequence (MetaHumanSample_Sequence), so we’ll select that. To learn more about creating a cinematic sequence, click here.

3) Within the sequencer window, there is another clapperboard icon on the top row menu. Click this to open the “Render Movie Settings” window.

4) From the Render Movie Settings window, you can select the output format for image and audio, resolution, compression, and output file location. After setting up, click the “Capture Movie” button on the lower right and wait for UE5 to finish rendering and saving your output file.

This operation completed in near realtime and the output is pristine. If your scene contains audio, then you’ll need to merge/combine it with the video output. I did this in After Effects, but other programs would probably work equally well.
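For anyone without After Effects, the merge step can also be done with the free command-line tool ffmpeg. This is a swapped-in alternative to the After Effects route described above, and the file names are placeholders. The Python sketch only builds the command. Uncommenting the last line runs it, which assumes ffmpeg is installed and on your PATH.

```python
import subprocess


def build_mux_command(video_path, audio_path, output_path):
    """Build an ffmpeg command that combines a video file with a separate audio file."""
    return [
        "ffmpeg",
        "-i", video_path,   # input 0: rendered video
        "-i", audio_path,   # input 1: audio track
        "-map", "0:v:0",    # take the video stream from input 0
        "-map", "1:a:0",    # take the audio stream from input 1
        "-c:v", "copy",     # keep the video as rendered, no re-encode
        "-shortest",        # stop at the end of the shorter input
        output_path,
    ]


# Placeholder file names, for illustration only
command = build_mux_command("capture.avi", "capture.wav", "capture_with_audio.avi")
print(" ".join(command))
# subprocess.run(command, check=True)
```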

Week 11: qualitative evaluation of concepts

Our online survey is now underway, and while this virtual format isn’t exactly like so-called “speed dating,” we are hoping that it will be able to serve a similar purpose for our research. Creating a meaningful online experience for our participants was a tall order, especially with such tight constraints. There are many risks when creating a fully automated and hands-off system. Not being there to clarify or to address questions or concerns in realtime was something we needed to accept as a trade-off. In exchange, we have a dozen unique participants ranging from 2 years to 27 years of experience, and from various districts around the country.

So far, the majority of responses have been from an online community of English teachers, so our data is skewed toward this perspective. On the plus side, English teachers provide excellent written responses. To avoid the pitfalls of statistics and quantitative analysis, we designed an online survey with open text fields, and we framed our questions around hypothetical scenarios. This would provide us with reflection and insights into how teachers imagine these concepts for themselves, and what perceived deficiencies come up for them in thinking about these systems in action.

Screenshot of survey responses, exported into a CSV file

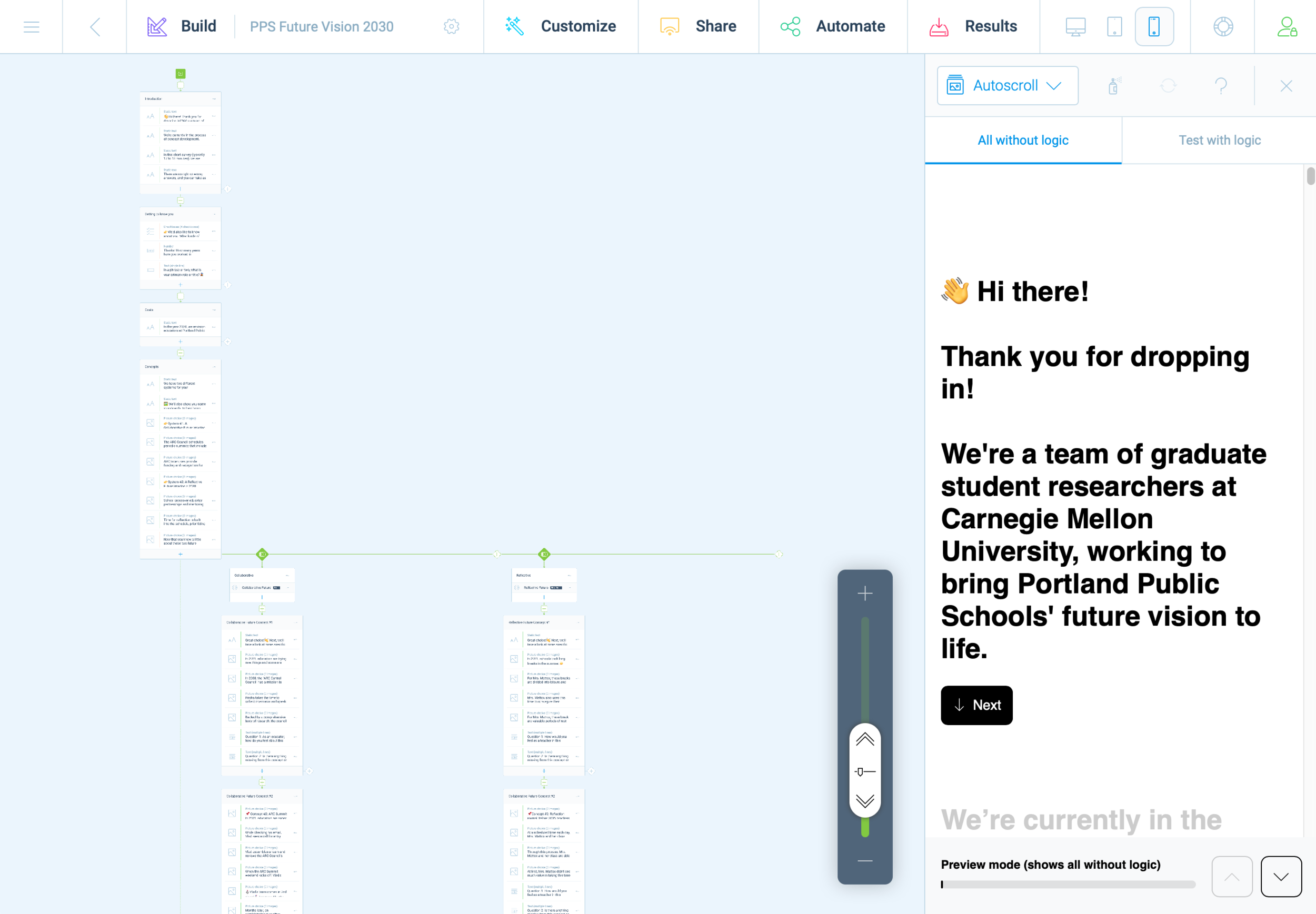

The last 24 hours in particular have been very exciting, as we finally gained access to online educator communities. This process has been slower than wanted, but we first needed to fully develop our survey before we could deploy it. This process in and of itself was a design challenge.

Last weekend, we decided to use the Tripetto platform. This gave us the same logic capabilities as TypeForm, but without any additional costs. It became clear almost immediately that we would need to prototype and refine our survey before receiving teacher feedback, and this effort was highly collaborative.

With multiple teammates, it was possible to divide this task into several areas that could be worked on independently and in parallel. We first decided on a basic structure and strategized the division of labor. Carol worked on the text/content based on a logic diagram we crafted together. While Carol crafted this outline, I created a mockup version in Tripetto. Without access to finalized concept sketches, I took some poetic license.

Screenshot of 2nd iteration prototype survey

As Carol and I worked together to refine the text copy, Cat and Chris worked together to create images and descriptive text for our participants. Once all of this content was ready for Tripetto, we began doing test runs, trying to break the experiences. This revealed some quirks with Tripetto’s logic functions and some of the less apparent features.

There are a few honorable mentions; Tripetto has a lot of subtle features that we often take for granted in other online experiences. Things like placeholder text, required fields, multiple choice radio buttons, checkboxes, multi and single-line text boxes. During the refinement phase, these features became essential and it was exciting to discover them—only after they were deemed essential enough to be worth the effort.

The minimalist UI of Tripetto made these features less evident, but not too hard to locate or execute. From start to finish, this experience felt a little shaky and uncertain but viable.

I often found myself this week grinding away on the platform, slipping into a state of mind that Mihaly Csikszentmihalyi describes as “flow.” In other words, creating a survey on Tripetto wasn’t easy, but it was just challenging enough to keep me interested in working through obstacles. I think that what helped support this effort the most was building models within platforms where everyone on the team is already fluent. For us, this was primarily Miro, Google Docs, and Sheets.

Screenshot of two representations of the survey, carried across platforms (Miro and Sheets)

First impressions matter, and we didn’t want to put out anything that was obviously a work in progress. Even with this in mind, we did have a few last-minute tweaks as we adapted our survey to maximize pulling power with other social media environments.

Arnold Wasserman’s desk critique was incredibly valuable for our team, as his feedback helped us to consider the importance of our survey as a communication tool. He recommended that we make the implicit, explicit, to directly communicate to our participants what we expected and why. We were encouraged to explain what questions we were asking, and to share this openly. This kind of transparency can be tedious, especially in text-based systems. I took this to task and simplified statements throughout the entire experience.

This gave the survey a personality all its own; like a casual and curious friend, we asked about specifics but with little pressure. We kept things open.

Open data cannot be calculated, it must be evaluated for patterns. Next week will be a scramble to synthesize patterns and new insights as we work to finalize system concepts into well defined parameters. We hope that through this process we will also identify opportunities to produce relevant and compelling artifacts (our final output/deliverable).

It still feels like a risk to be so far into a process and to still not have a clear idea of what it is we are making. We instead draw our assurances from what we have already made: an index of relevant articles, interview notes, countless diagrams and visual representations of high-level abstract concepts and maps at almost every level of visual fidelity imaginable, hundreds of presentation slides, dozens of pages of reflective text, and months worth of slack messages, shared links, and drafted emails. We created interactive digital workshop spaces and protocols for our participants, and archives with 256-bit encryption.

When looking at the collective volume of effort from this team, it’s difficult to imagine that we wouldn’t make something meaningful in the end. Is that too optimistic? Ask me in a month.

Kinetic-friendly spoon project Mega Post

That’s a wrap! It’s certainly been an interesting semester, but now I am ready to put it behind me. Reflecting on the spoon project, I have some final thoughts and observations. First, I want to thank the fine folks at CMU School of Design. From the amazing and hardworking faculty and graduate student cohort, I have had nothing less than inspiration and encouragement throughout this entire process, despite the obvious challenges of working remotely.

Rendering of sixth and final (?) spoon design. I pulled the kitchen design (Pierre Gilles) and bowl (Damogran Labs) from GrabCad.com. The spoon and coffee mug are mine.

This project was divided into two parts: the first part focused on exploring different ways of prototyping and making. This was described to me as an informal way of A/B Testing for methods. The second part involved the deliberate iteration of prototypes through user testing — a challenge in the context of a global pandemic and social distancing. To make the most meaningful design choices possible given limited resources, I decided to leverage the power of physical simulation to supplement the making of physical prototypes.

There are a variety of 3D software tools that offer some degree of physical simulation. For this project, I selected Maxon Cinema 4D R20 (Educational License) and Blender as my two ways of making. I chose these because I am already familiar with Cinema 4D and know how to manage a workflow in that context, because Blender is open source and free for anyone to use, and because both programs work under macOS and Windows environments (my rendering workstation is a Hackintosh with multiple operating systems, which grants the flexibility to overcome certain technical limitations). My initial experiments with Cinema 4D were… not great.

As you can see, there are “physics” happening here, but they are not anything close to the physics of the real world. This is not “real world” physics, this is Asshole Physics:

Zachary "Spokker Jones" Gutierrez and I came up with the term "Asshole Physics" when we were discussing the game and the physics models it employed. Basically there's a lot of crap you can knock over and kick around, including dead bodies, buckets, cans, and little sections of drywall which are standing around in the middle of rooms for no obvious reason. Zachary casually mentioned, "I have made it a point to knock over every fucking thing in that game. I am living out my fantasies of being a giant asshole," and I responded by stealing his "asshole" comment and claiming that I made it up. Thus "Asshole Physics" was born.

Without more sophisticated plugins to simulate fluid, Cinema 4D R20 is only “out of the box” capable of non-Newtonian semisolids. I can make stuff bump around and “squish.” I can have a 3D character micturating on the side of a building. I can create the appearance and illusion of something like a fluid, but with such restrictions, I could not realistically evaluate my spoon designs. I explored my options and found that Next Limit’s RealFlow plugin would meet my basic needs. Best of all, they offer a free 30-day trial! My initial excitement quickly waned after the plugin failed to install and activate on my system…

(This email chain is long and covers a week of back and forth with customer service. I am including the entire conversation as a way to recreate my experience. While this may not directly relate to the scope of this project, I still believe that there is value in documenting the unexpected problems that crop up when trying to do something new.)

It took a week to finally get everything sorted with the demo. During that time, I began to explore option B: Blender.

Blender is a free, powerful, open source 3D creation tool. Best of all, it includes the mantaflow fluid simulation engine (since version 2.8). I have worked with Cinema 4D on other projects, and have become fairly comfortable with the interface. Given my experience with Fusion 360, Inventor, and C4D, I knew that I would need to overcome a learning curve before I could use this software to meet my needs for this project. Fortunately, I was able to find a spectacular tutorial series for beginners.

If you want to read more about my experience with the tutorial, click here.

This tutorial was ideal because it involved exercises that helped me learn how to use the interface, and covered several different workflows. I was really impressed with Blender’s node-based material system and procedural textures. You can work strictly with parametric modeling, or you can discretely modify mesh geometry to create highly organic and imperfect forms. I’m excited to work with Blender on future projects. It’s a very exciting time to be working in 3D.

While working through these tutorials, I began sketching and working in Fusion 360 to craft my first spoon designs for part 2 of this project. You can read more about this experience here.

Takeaways from Part 1

I really appreciated the responsiveness from the team at Next Limit. Clearly there are problems with the software’s implementation of their product’s copy protection. This is an all-too-common problem in the world of software. Programmers gotta eat just like everybody else, and we certainly should make sure that the talented and hardworking folks behind the code are able to put food on their table at the end of the day. Piracy can deprive a small business of the necessary revenue to keep the lights on, so I am absolutely sympathetic to this reality and what risks are involved when you release your software for demo purposes. Getting people to pay for something that they can easily get for free is a challenging proposition. At the same time, you cannot realistically expect to get customers to pay for software if they cannot try it first. Ultimately, this one week of back and forth with customer support was a critical loss. I never completed a side-by-side comparison of fluid simulations. While I did eventually succeed at installing and using RealFlow to do fluid simulations, (and was honestly impressed with how easy it was) I did not, however, have enough time to setup a comparable simulation to evaluate spoon designs. My trial expired about a week ago, and I see this aspect of the project as a lost opportunity. If Next Limit applied similar licensing practices as Maxon (verify it through .edu email address), they could offer an educational package of their RealFlow plugin.

Blender really came through for me. The learning curve was aggressive, but not impossible. While I found mantaflow to be a respectable and entirely capable fluid simulator, it was not without its own share of issues. I spent a lot of time making granular tweaks to improve the fidelity of my simulations, while also using the observations from my simulations to inform design decisions for my spoons in part 2 of this project.

Part 2: Design Iterations Based on User Testing

While this project required user testing and design iterations based on feedback, I decided to limit the user evaluations to address handle shape and the spoon’s overall dimensions. This was not an arbitrary decision or an excuse to focus on physical simulation of fluid dynamics (with user testing as an aside). No, this decision was based on the nature of the course from which it was assigned: Prototyping for Interaction Design. This semester I have been focusing on designing for interaction (arguably, all designers do, at some point in their process, focus on this aspect). When thinking about the tools we use (to eat food) as a system, it is important to consider the touchpoints involved. The handle of a spoon is a non-trivial component. It can take on many forms, and naturally includes affordances. How someone holds a spoon, and how easy it is for them to use it are central to the evaluation of the design.

The design iterations were highly generative in nature. Inspired by both user evaluations and physical simulations, I maintained a homeomorphic continuity: treating the initial shape as an elastic form to be molded and reshaped to maximize performance. I wanted to create something that would be useful, and the physical simulation of fluids provided a means of evaluation. Still, knowing how a concave shape might be optimized to perform under rapid movement is only one aspect of a more complicated interaction, and this test alone could not fully address human needs. When physical form is designed and directed to improve user interaction (and physical properties are given equal consideration), it is possible to create a truly useful tool. I realize that this is a very technical description, but it is easier to understand when properly visualized. I have rendered a compilation sequence to show how this spoon shape evolved to its final(?) form (I am still considering a physical prototyping stage for this project over the summer).

A sequence of fluid dynamics tests designed to evaluate fluid retention of concave forms. Carnegie Mellon University, School of Design, Prototyping for Interaction, Spring 2020.

Toward the latter half of this sequence, you will notice a change in colors (for both the liquids and spoons). I decided to differentiate the final rendering sequences as these were based on user evaluations. The colors chosen for these final sequences are based on the color tags used for the user test:

These printouts are derived from DXF vector images exported from Fusion 360. The designs shown are oldest (top) to newest (bottom). The fifth design (blue) is rendered with a blue body and green liquid.

I printed and mailed the paper prototype to a potential user suffering from ongoing hand tremors (my partner’s mother). I sent this without written instructions. Instead, I only provided different color tags to facilitate feedback. My user let me know that the red spoon handle was in the “Goldilocks” zone in terms of size and shape: not too big, not too small, not too curvy, not too straight. Using this feedback I constructed the sixth and final (?) form — see the first image of this post.

The user test included a direct side-by-side comparison with existing dinnerware.

Before developing these simplified paper prototypes, I also experimented with ways of making more three-dimensional forms that could be sent in the mail. While this novel approach showed some potential, I was concerned with how user error might complicate or (even worse) bias feedback. Still, these paper prototypes helped me to better understand and interpret the scale of my 3D models.

Final Thoughts

This project still feels somewhat incomplete. Perhaps this is because the generative design process itself can always demand further iteration, or maybe it is because I have not yet created a physical prototype that can actually be tested as an eating instrument. Maybe it is only because there were still a few “rogue droplets” (grrrrrr) that I simply could not keep contained with the completion of my sixth iteration. Whatever the net effect might be from these various shortcomings, I am pleased with the learning opportunities that were presented throughout this exploration of design.

Were I to continue with this process, the next steps would be to 3D print the latest shape using a food-safe material (there are a few third-party vendors that offer this service). I would then ship that latest design for further user evaluation. I believe that there are still many additional iterations necessary before I could defend having created something that satisfies the criteria I set out with this project (i.e., a spoon that overcomes the challenges of involuntary muscle movements and essential tremors).

If I were to collaborate with others, I would also want to evaluate the ecological and economic impact of such a device. How might we go about manufacturing at an appropriate scale? How might additional user tests with a wider audience influence the existing form? There remain many unanswered questions, and I have a newfound respect for the power of generative design.

Bugs in the Blender

I have continued to have luck exploring the Fluid simulations in Blender, but this process has not been without its quirks. I recently encountered a strange issue related to the Particle Radius setting. From the Blender manual:

Particle Radius

The radius of one liquid particle in grid cell units. This value describes how much area is covered by a particle and thus determines how much area around it can be considered as liquid. A greater radius will let particles cover more area. This will result in more grid cells being tagged as liquid instead of just being empty.

Whenever the simulation appears to leak or gain volume in an undesired, non-physically accurate way it is a good idea to adjust this value. That is, when liquid seems to disappear this value needs to be increased. The inverse applies when too much liquid is being produced.
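To make that description more concrete, here is a toy sketch of how a larger particle radius ends up tagging more grid cells as liquid. It is not Blender's actual solver: the 2D grid, the `Particle` type, the `countLiquidCells` function, and the rule "a cell is liquid if its center lies within the radius of any particle" are all assumptions made purely for illustration.

```cpp
#include <cstddef>
#include <vector>

// A particle position in grid-cell units (hypothetical type for this sketch).
struct Particle {
    double x;
    double y;
};

// Count the cells of a width x height grid whose centers lie within `radius`
// (in grid-cell units) of at least one particle. This is a simplified
// stand-in for liquid tagging, not Blender's real algorithm.
std::size_t countLiquidCells(const std::vector<Particle>& particles,
                             int width, int height, double radius) {
    std::size_t liquid = 0;
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const double cx = col + 0.5;  // cell center, x
            const double cy = row + 0.5;  // cell center, y
            for (const Particle& p : particles) {
                const double dx = p.x - cx;
                const double dy = p.y - cy;
                if (dx * dx + dy * dy <= radius * radius) {
                    ++liquid;
                    break;  // count each cell only once
                }
            }
        }
    }
    return liquid;
}
```

With a single particle in the middle of a 5 x 5 grid, growing the radius from 0.5 to 1.5 cells increases the number of tagged cells, which is the same direction of effect the manual describes: too large a radius and the simulation appears to gain liquid, too small and it appears to lose it.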

What does this look like in practice? My most recent simulation actually seems to produce fluid as the scene progresses.

Nevertheless, I was able to gain critical insights into this form and will continue to iterate new designs. This is being done in conjunction with paper prototyping. These forms are less sophisticated, but still provide valuable information about how users will experience and interact with this flatware.

Prototyping – Part 2

Working with Blender has continued to go well.

I have also been looking at some of the existing solutions in this space:

KFS Easy Eat

http://www.eating-help.com

Liftware, by Verily

https://www.liftware.com

EliSpoon

https://elispoon.com

Ornamin - Supportive Cutlery (Parkinson’s)

https://www.ornamin.co.uk/shop/cutlery-set?number=SW24

S’up Spoon

https://www.youtube.com/watch?v=C8nNlWw6KbA

Apex Medicine Spoon

https://www.riteaid.com/shop/apex-medicine-spoon-0233706

I have been sketching and studying these forms in consideration for my own designs.

Some shapes are unappealing because of their associations. These still deserve consideration, as they function well in this space.

This week I will begin iterating designs in Fusion 360. Hopefully, I will finally be able to make use of my RealFlow trial license. I’m curious to see how the “out of the box” settings function with these geometries.

Fluid Simulation in Blender

“Throw off your fears let your heart beat freely at the sign that a new time is born.” — Minnie Riperton

I’ve completed my workflow design for fluid dynamics testing in Blender. Here’s a proof of concept:

Now I just need to figure out RealFlow in C4D…

Blender: Time To Make The Donuts

I’m very happy with the results of my first project in Blender.

White Frosting

Classic Pink

And Nekkid!

After struggling with Cinema 4D, I decided to use a plugin rather than trying to kludge together some handcrafted fluid simulation. Unfortunately, I ran into a licensing problem with Next Limit’s RealFlow plugin. I’ve been emailing back and forth all week, and they finally got a fix for me: SUCCESS! While I waited to sort that out, I decided to give Blender a try. I began following this tutorial, but quickly found myself getting lost. I needed to become more familiar with the software and interface, so I decided to follow a beginner’s tutorial to get my feet wet. I chose this particular video series because it employs procedurally generated elements, and covers all the basic modeling commands, node handling, and the complete stack of scene construction. And also: donuts are delicious. The world is terrifying, and we could all use something sweet.

I am honestly shocked that Blender is a free program. Many 3D programs are horrifically expensive; without student licensing, I could never afford to touch most of the 3D tools I’ve been learning over the years. Yet Blender seems to be very capable as a 3D program. I have only scratched the surface, but it is very impressive. Now that I have familiarized myself with Blender, and have a working fluid simulator plugin for C4D, I am in good shape to begin A/B testing.

Prototyping Cutlery

For one of my final projects this semester, I’m interested in creating a set of eating tools that help account for involuntary muscle movements (e.g., Parkinson's disease or tremors) and other mobility difficulties that limit the enjoyment and consumption of foods; I'm interested in exploring simple solid shapes, living hinges, and assembly forms derived from explicit advantages of additive manufacturing techniques.

[I want to make a really nifty spoon.]

Fabricating physical prototypes will be a challenge (…)

Seriously: fuck you, COVID-19.

This is not the only challenge, however. Finding access to food-safe materials, conducting a series of user tests, iterating forms, and self-directed research will also require creative workarounds to overcome the limitations of working while under “shelter-in-place” orders due to a global pandemic.

I have decided to go 100% digital. Instead of building various forms and testing their ability to hold fluids under rapid motion, I will conduct a series of simulated physics tests to evaluate them. For the first part of this project, I am required to conduct an A/B test or evaluation. I have decided to conduct dual testing using two different 3D programs.

Method 1:

Maxon Cinema 4D includes a variety of physical simulation abilities—including particles and fluid dynamics. I intend to leverage this software’s capacity to test various designs and forms. Tests will be designed to evaluate fluid retention under repeated multi-axial movements. Cutlery designs will be tested against traditional forms (e.g., standard soup spoons).

Method 2:

Blender is a free, open source platform for creating 3D models, rendering, animation, and more. Among the built-in features is a fluid simulator. Combined with rigid body and gravity physics, it should be possible to evaluate a variety of spoon shapes and (potentially) even different forms of cutlery.

Considerations:

By using two different simulations, it should be possible to more thoroughly evaluate a design’s fluid retention abilities.
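One way to turn "fluid retention" into a number that can be compared across both simulators is the fraction of fluid particles still inside the spoon's bowl at the end of a run. The sketch below is only an illustration under stated assumptions: it presumes the final particle positions can be exported from each simulation, it approximates the bowl as an axis-aligned box, and `Point`, `Box`, `contains`, and `retentionRatio` are hypothetical names rather than anything provided by Cinema 4D or Blender.

```cpp
#include <cstddef>
#include <vector>

// A particle position exported from a simulation (hypothetical format).
struct Point {
    double x;
    double y;
    double z;
};

// Axis-aligned box standing in for the spoon bowl (a simplifying assumption).
struct Box {
    Point lo;  // corner with the smallest coordinates
    Point hi;  // corner with the largest coordinates
};

// True when the point lies inside the box or on its boundary.
bool contains(const Box& box, const Point& p) {
    return p.x >= box.lo.x && p.x <= box.hi.x &&
           p.y >= box.lo.y && p.y <= box.hi.y &&
           p.z >= box.lo.z && p.z <= box.hi.z;
}

// Fraction of particles that end the run inside the bowl, from 0.0 to 1.0.
// Returns 0.0 when there are no particles at all.
double retentionRatio(const std::vector<Point>& finalPositions, const Box& bowl) {
    if (finalPositions.empty()) {
        return 0.0;
    }
    std::size_t kept = 0;
    for (const Point& p : finalPositions) {
        if (contains(bowl, p)) {
            ++kept;
        }
    }
    return static_cast<double>(kept) / static_cast<double>(finalPositions.size());
}
```

Running the same motion on a traditional spoon and on a candidate design, then comparing the two ratios in each program, would give a simple A/B figure per simulator.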

Timeline:

Week 1 — Cinema 4D Workflow: Since I am already familiar with Cinema 4D, I have decided to begin this project by constructing my first simulation with this software. I will use Fusion 360 to generate original spoon designs, as well as a “traditional” spoon shape to compare performance.

Week 2 — Blender Workflow: Using the assets from week 1, I will spend week 2 developing and executing a comparable test running under Blender’s fluid simulation engine.

Resources:

Blender Tutorial - Realistic Fluid Simulation: https://www.youtube.com/watch?v=zmw-BTCbWMw

Cinema 4D Tutorial - Water simulation Animation: https://www.youtube.com/watch?v=JehbYBAZw7c

What does Day 1 look like?

Let’s just say I have a lot to learn.

Interactive Design Prototyping

THE TIME HAS COME TO…PUSH THE BUTTON

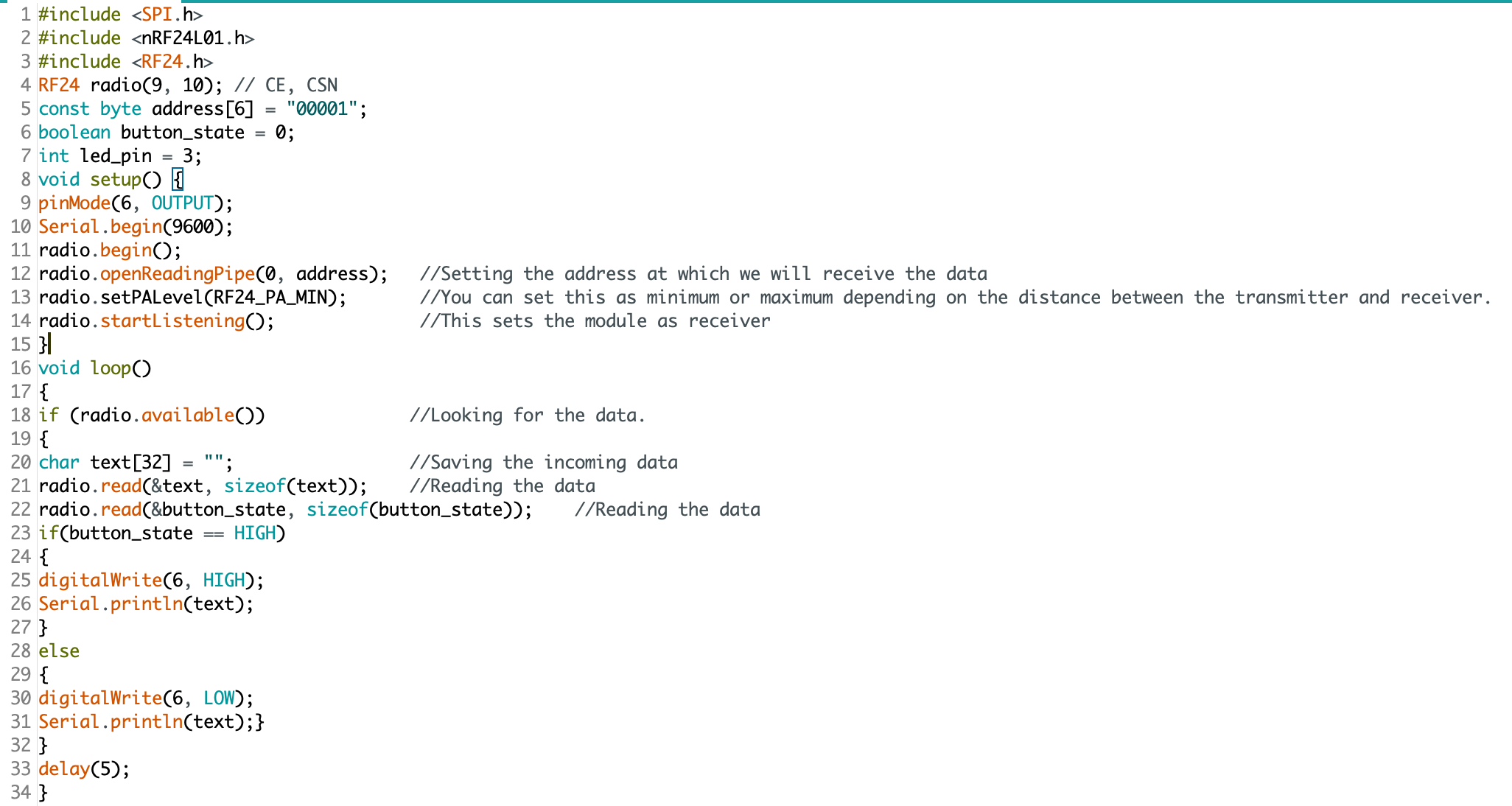

Wireless communication between Arduino #1 and #2

My current project in IxD Prototyping involves physical computing (i.e., “interactive systems that can sense and respond to the world around them.”) I have worked with Arduino before (Restricted Area, 2017) but this newest project is expected to have a daily use. In my head, I keep a long list of annoying technology interactions, and it gets updated frequently. We are saturated with unsatisfying technology and devices that cause more problems than they solve. We have inconveniences stacked upon inconveniences, and if we were to step outside of this environment, we would inevitably conclude that most electronics are made to punish the buyers. I am looking to improve just one such interaction.

Back in 2012 I bought an HD video projector. If you love to watch movies, there is something magical about having “the big screen” at home. I love it. Do you know what I don’t love? Using an infrared remote control on a device that is mounted above and behind me. Seriously, Epson: what were you guys (and yes, I’m assuming it was a team of men, with their dumb penises getting in the way of common sense) thinking?! The primary function of the remote control is to simply turn the projector on and off. I would gladly give up the remote control entirely if I could simply move the power button to the armrest of my couch. Instead, I must contort my arm in Kama Sutra fashion just to find the right angle to get the sensor to recognize the POWER-ON command from the remote.

Getty Images: the various methods for turning on an Epson HD Projector.

My girlfriend’s method to bypass the remote is more elegant: she retrieves a step-stool from our utility closet and presses the ON/OFF button on the projector chassis. This works well, but … well, let’s just say, it ruins the mood. I began to explore other options, and realized that the primary issue is that IR remotes are directional. The IR sensor is part of the projector assembly, and cannot be relocated. Arduino is capable of IR communication, and it is also capable of RF communication. Radio frequency is far less dependent on line-of-sight, especially within the context of indoor and residential use. Imagine what WiFi would be like if it worked over infrared. Consider also that Apple abandoned their IR remote interface for the Mac.

Enter the Arduino

I found a few open source projects that utilize IR and RF communication:

https://learn.sparkfun.com/tutorials/ir-communication/all

https://www.electroschematics.com/ir-decoder-encoder-part-2-diy-38-khz-irtr-module/

https://learn.adafruit.com/using-an-infrared-library/hardware-needed

https://www.sparkfun.com/datasheets/Components/nRF24L01_prelim_prod_spec_1_2.pdf (PDF Warning)

https://www.deviceplus.com/arduino/nrf24l01-rf-module-tutorial/

https://forum.arduino.cc/index.php?topic=421081.0

https://howtomechatronics.com/tutorials/arduino/arduino-wireless-communication-nrf24l01-tutorial/

All of these resources are excellent. I want to call attention to one more link: https://create.arduino.cc/projecthub/muhammad-aqib/nrf24l01-interfacing-with-arduino-wireless-communication-0c13d4

I have a bone to pick with this one. Take a look at the wiring diagram:

Diagram created by /u/Muhammadaqibdutt

Note the LED pin-out for the receiver. This diagram shows the positive leg of the LED connecting to Pin 3.

Now, let’s take a look at the code:

The devil is in the details: “digitalWrite(6, HIGH)” condition turns the LED on. Pin 3 does nothing.
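A mismatch like this is easier to avoid when the pin number lives in one named constant that every call shares. The sketch below is an illustration, not the tutorial's code: `kLedPin`, `setupLed`, and `setLed` are hypothetical names, and the small stand-ins for `pinMode`, `digitalWrite`, `OUTPUT`, `HIGH`, and `LOW` exist only so the snippet compiles away from the board. On an Arduino those come from the core library, so that section should be deleted.

```cpp
#include <map>

// Off-board stand-ins for the Arduino core (an assumption for this sketch).
// On real hardware, delete this section; the Arduino core provides these names.
constexpr int OUTPUT = 1;
constexpr int HIGH = 1;
constexpr int LOW = 0;
std::map<int, int> pinLevels;  // records the last level written to each pin
void pinMode(int /*pin*/, int /*mode*/) {}
void digitalWrite(int pin, int level) { pinLevels[pin] = level; }

// Single source of truth: if the LED moves, only this line changes.
constexpr int kLedPin = 3;  // the pin shown in the wiring diagram

// Configure the LED pin as an output.
void setupLed() { pinMode(kLedPin, OUTPUT); }

// Drive the LED through the shared constant instead of a literal pin number.
void setLed(bool on) { digitalWrite(kLedPin, on ? HIGH : LOW); }
```

Because nothing below the constant mentions a literal pin number, the diagram and the code can only disagree in one place.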

This made for some very “fun” troubleshooting. I’ve since ironed out all the kinks, and have successfully pirated the IR remote signal from an Epson brand projector (on loan from the Design Office at CMU), and have moved on to making an enclosure. Will I 3D print or laser cut? I have not yet decided.

Here is some sample code for my RF triggered IR emitter:

(NOTE: this code is just one half of the project, and by itself cannot do anything. You’ll also need IR and RF libraries to make this code work on your Arduino)

#include <SPI.h>
#include <nRF24L01.h>
#include <RF24.h>
#include <IRLibAll.h>

RF24 radio(9, 10); // CE, CSN
const byte address[6] = "00001"; // must match the address used by the transmitter
boolean button_state = 0;
int led_pin = 3; // declared but not used below; the indicator LED is driven on pin 6
IRsend mySender;

void setup() {
  pinMode(6, OUTPUT); // indicator LED
  Serial.begin(9600);
  radio.begin();
  radio.openReadingPipe(0, address);
  radio.setPALevel(RF24_PA_MIN);
  radio.startListening(); // put this radio in receive mode
}

void loop() {
  if (radio.available()) {
    char text[32] = "";
    // Two reads: this assumes the transmitter (the other half of the project,
    // not shown here) sends the text and the button state as separate payloads.
    radio.read(&text, sizeof(text));
    radio.read(&button_state, sizeof(button_state));
    if (button_state == HIGH) {
      digitalWrite(6, HIGH);
      Serial.println(text);
      // Arduino Remote On/Off button code
      mySender.send(NEC, 0xffa25d);
    } else {
      digitalWrite(6, LOW);
      Serial.println(text);
    }
  }
  delay(5);
}

Evaluating Tools for Information Architecture

OmniGraffle for Mac

From the website:

OmniGraffle is a comprehensive, yet easy to use diagramming and drawing application. Drag and drop to create wireframes, flow charts, network diagrams, UI mockups, family trees, office layouts, and more. OmniGraffle 7 comes with plenty of features to get started in Standard. OmniGraffle Pro has everything in Standard, plus features suited specifically for folks that make a living designing or working with graphics—things like Shared Layers, Artboard Layers, Non-Destructive Shape Combinations, Blending Modes and Fill Effects, Visio support, SVG export, and more.

Weaknesses:

Price - even their educational license for students costs $89.99. They do offer a free trial, but it only works for 14 days.

Compatibility - not easy to transfer projects to other platforms (e.g., Visio)

Learning curve - many reviews complain that it is difficult to learn how to use

xSort for Mac

From the website:

Visual environment simulating a table with cards (and outline view).

Supports open, semi-open and closed exercises.

Supports sub-groups (participants can put groups inside groups).

Control every aspect of the exercise (sorting type, cards placement, etc.).

Statistical results (cluster tree, distance table, etc.) updated in real time.

Displays individually all the info related to an individual session.

Easily select the sessions you want to use based on different criteria.

Create, read, print and export reports with a single click.

Lock the document so that a participant may do only one session.

Fully integrated with Mac (Intel and PowerPC-based Macs).

Price - Free

Weaknesses:

32-bit only (does not work with the latest version of macOS)

No support

Has not been updated in years

PowerMapper Desktop

From the website: