This week I continued working on 3D asset creation. My basic approach so far has been to start with a simplified geometry in Fusion 360, export that design as an .FBX (Autodesk's Filmbox interchange format), and then import the FBX into Blender for UV mapping, materials, and motion rigging. There’s probably a more streamlined way to generate this content, but from a feasibility standpoint, this approach allows me to be flexible and to use different tools for discrete tasks. This week I will be importing these combined assets into Unreal Engine.

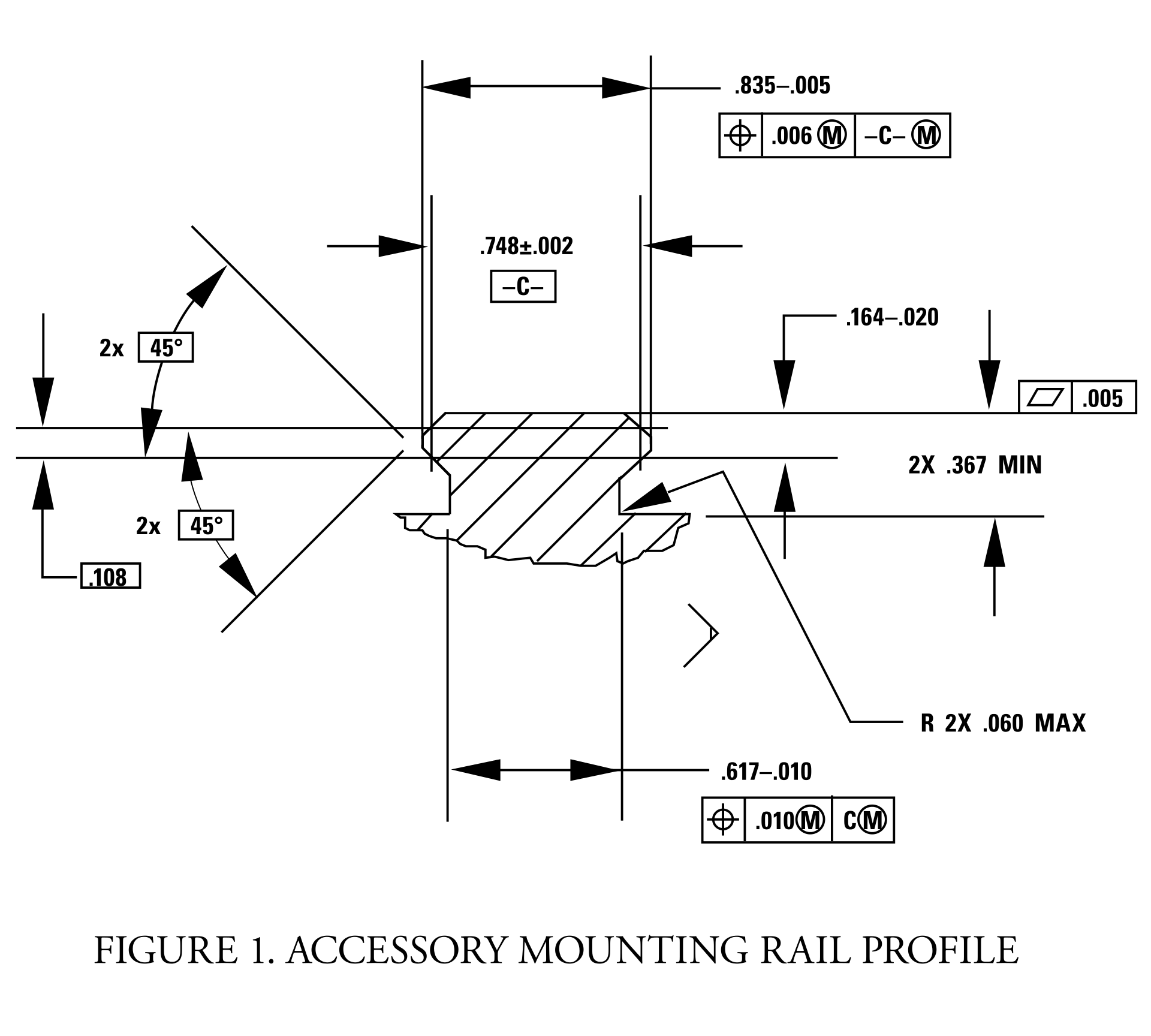
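
For anyone who would rather script the Blender leg of this pipeline than click through it, here is a minimal sketch. It assumes it is run from inside Blender (the `bpy` module only exists there), and the helper names and the `_blender` file suffix are hypothetical choices of mine, not part of any tool. It only covers the import and re-export; the UV mapping, material, and rigging work would happen in between.

```python
from pathlib import Path


def export_path_for(source_fbx: str, suffix: str = "_blender") -> Path:
    """Return a sibling path for the re-exported FBX (hypothetical naming scheme)."""
    src = Path(source_fbx)
    return src.with_name(f"{src.stem}{suffix}.fbx")


def roundtrip_fbx(source_fbx: str) -> Path:
    """Import an FBX into the current Blender scene, then export it again.

    Must be called from Blender's Python environment.
    """
    import bpy  # imported lazily so export_path_for() still works outside Blender

    # Bring the Fusion 360 export into the open scene.
    bpy.ops.import_scene.fbx(filepath=str(source_fbx))

    # ... UV mapping, material assignment, and rigging would go here ...

    # Write the combined asset back out for Unreal Engine to pick up.
    target = export_path_for(source_fbx)
    bpy.ops.export_scene.fbx(filepath=str(target))
    return target
```

Keeping the path helper separate from the Blender calls means the naming logic can be checked on any machine, even one without Blender installed.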

This week was also my final week of the term at PCC, where I enrolled in the online Advanced Fusion 360 course. I’ve been working on a group project, designing assemblies for use in a solar projector system. The design is based on COTS (commercial off-the-shelf) parts, which required me to draft profiles to meet engineering specifications.

Picatinny rail specification downloaded from Wikimedia Commons.

The final deliverables are due this coming Saturday, and there is still a good bit of work to be done before we get graded on this project. Nevertheless, I am very pleased with the current state of things. I’ve been using Quixel Mixer to produce more realistic rendering materials than those in the library included with Fusion 360. I say “more” realistic because Fusion 360 already has some excellent materials. Take a look at this rendering of a Bushnell 10x42 monocular (one of the components in this project):

I haven’t yet added any details, but as you can see, the rubberized exterior and textured plastic hardware are fairly convincing. Now, take a look at the mounting hardware rendered with Quixel textures:

An important component of photorealism is the inclusion of flaws. Real-life objects are never perfectly clean or perfectly smooth, and they never have perfect edges. Surface defects, dirt, scratches, and optical effects play an important role in tricking the eye into believing a rendering. With Quixel Mixer, it is possible to quickly generate customized materials. While this product is intended for use with Unreal Engine and other real-time applications, it does an amazing job when coupled with a physically based renderer.

Picatinny rail set with hardware and bracket.

I’m excited to see what can be done with these materials in a real-time engine, especially given the advanced features of Unreal Engine 5. Fusion 360’s rendering is CPU-driven, whereas Unreal is GPU-accelerated. With both Nvidia and AMD now selling GPUs with built-in raytracing support, it won’t be long before we see applications that offer photorealistic rendering directly within modeling workflows.

GPUs also work extremely well as massively parallel computing units, ideal for physical simulations. This opens up all kinds of possibilities for real-time simulated stress testing and destructive testing. It wasn’t that long ago that ASCI Red was the pinnacle of physical simulation via supercomputer. Today, comparable systems can be purchased for less than $2,000.

Of course, this price assumes you can buy the hardware at retail. The current chip shortage has inflated prices to more than 200% above MSRP. Fortunately, with crypto markets in decline and businesses reopening as vaccination rates exceed 50% in some regions, there are rays of hope that raytracing-capable hardware will be in hand soon.
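
To put that markup in concrete terms: 200% above MSRP means three times the list price, not double. Here is a quick sketch of the arithmetic, assuming the $2,000 figure from earlier as the retail price (the function name is just for illustration):

```python
def street_price(msrp: float, percent_above: float) -> float:
    """Price after a markup expressed as a percentage above MSRP."""
    return msrp * (1 + percent_above / 100)


retail = 2000.0  # the "less than $2,000" upper bound, treated as MSRP
inflated = street_price(retail, 200)
print(f"${inflated:,.0f}")  # prints "$6,000"
```

So a system that should cost under $2,000 could easily run past $6,000 at scalper prices.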