We began this week with a guest lecture from Adam Cowart, a PhD candidate in the transition design program. He introduced us to the concept of CLA (Causal Layered Analysis). We used this framework to better understand the landscape of our problem space at Portland Public Schools. Adam described different facets of the problem space through the lens of “litany filters.” To recognize what futures are feasible, we need to understand the triad of history, present, and future, and what elements in our landscape pull, push, or weigh down progress.

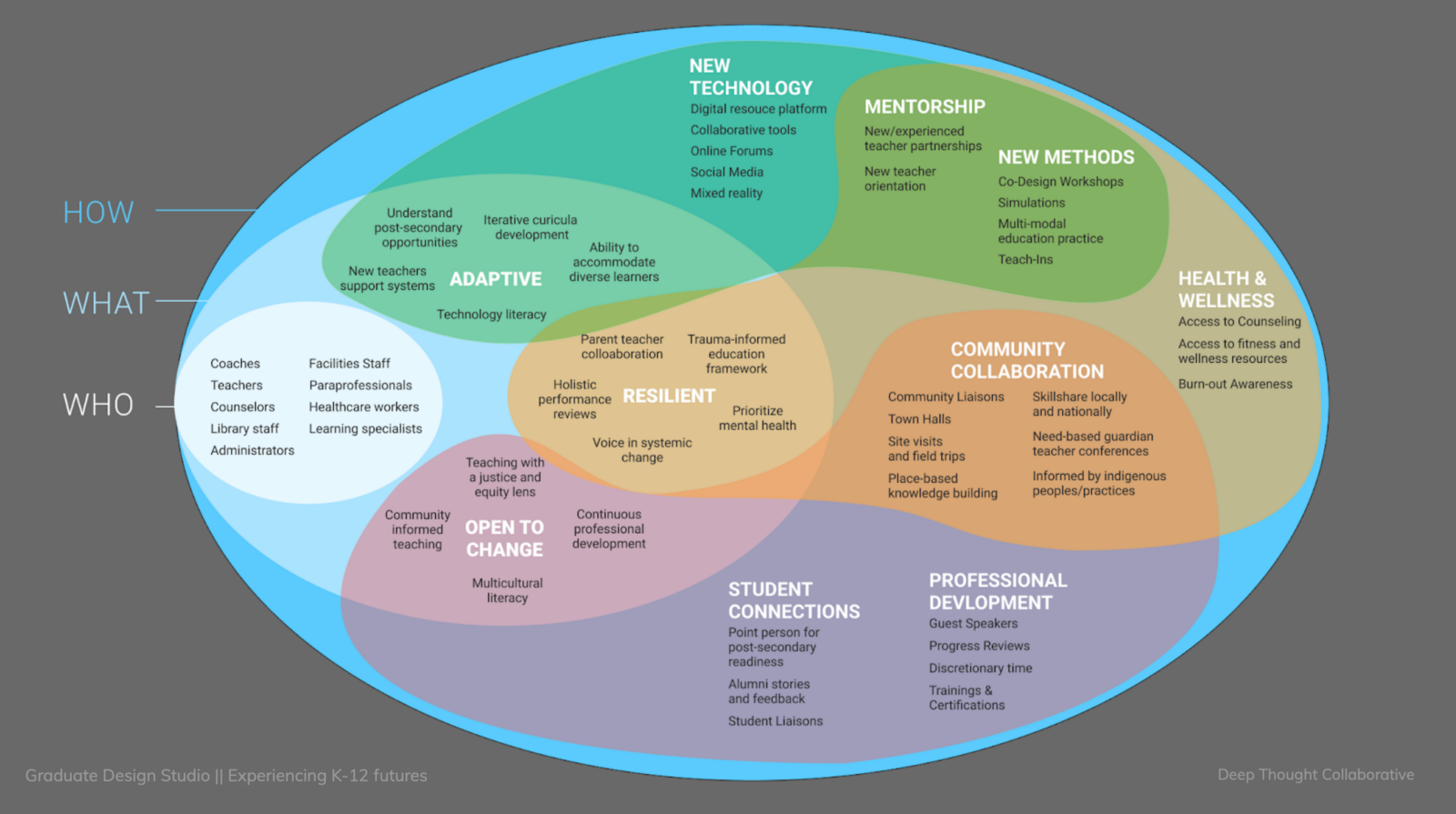

We took some time in class to reframe our insights through this framework, and began synthesis of potential elements to build a bridge toward the future vision created by Prospect Studio. This process began slowly, but after some heavy lifting we began filling out the diagram with great enthusiasm! It was refreshing to revisit our secondary research (which was already categorized under a STEEP-V framework). It was revealing to see visually how far we have advanced our understanding of this problem space since our literature review and background reading.

Outside of class, our team was busier than ever. Working to adapt and overcome the obstacles we’ve encountered in our generative research phase has not been easy. I’ve struggled to support these efforts. The external factors of my personal and professional life have been an ongoing source of strain. I feel so much gratitude for the support and encouragement I’ve received from this team, and this week I felt a great deal of pressure to reciprocate.

Sample of generative research protocols

This effort to pay back the generosity I received (when I needed it most) began with a comprehensive draft of our protocols for generative research, and the specifications for our workshop. Working with Carol, we delivered this to the team ahead of schedule. It was necessary for us to draft new protocols and workshop exercises to include a broader audience, outside of Portland Public Schools. We found that last week was somewhat of a dead end for seeking participation from our intended stakeholders (administrators and educators at PPS).

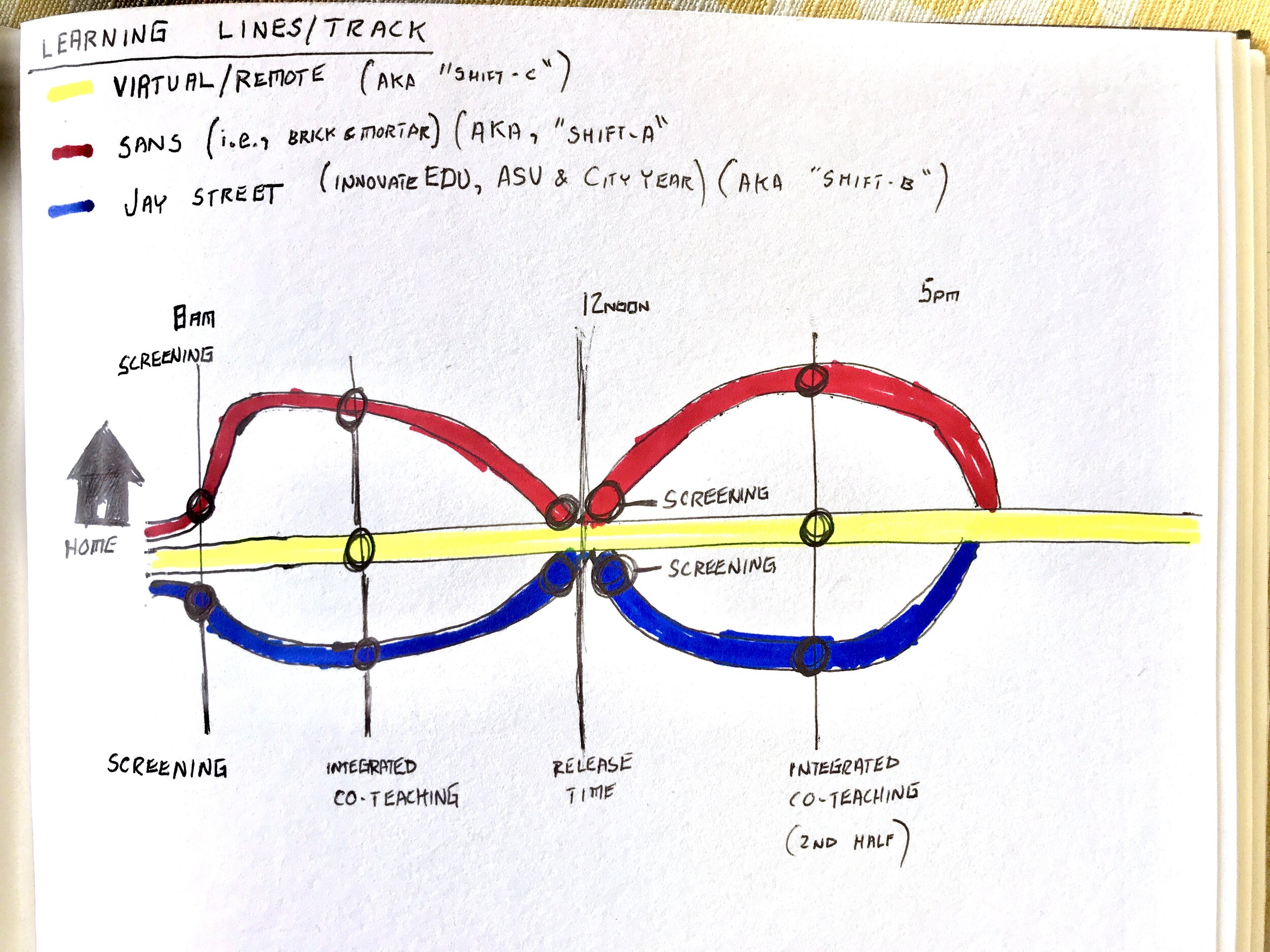

For our workshop, we wanted to know how different stakeholders perceive their relationships with counterparts, to learn what different stakeholders prioritize and why, to gain a deeper understanding of how educators think about the future of public education, and to explore and define preferred futures.

We conducted three separate workshop sessions with educators outside of PPS. This included neighboring districts of PPS (Gresham-Barlow), as well as out-of-state educators. This approach allowed us to glean insights into what is common across the US public school system and what is more specific to Portland. While this adaptation carries some risk of skewed data, it is far preferable to remaining without any additional insights beyond our primary research activities.

Screenshots of workshop activity

This was my first experience with executing participatory design with stakeholders and it has been such a rollercoaster of emotions. Since Carol and I worked on the protocol together, it was only logical that we also create the visual and interactive components for the workshop. We iterated on our initial concept by practicing with our own team, with each member taking a turn roleplaying as a participant. This helped us to work out the kinks and refine details before putting anything in the hands of our participants.

The first workshop with a real participant was very revealing. We had access to their thought process in real time: their visual associations, priorities, and ideas about the future were peeled back in layers, digging deeper into their lived experiences than we ever got through primary research and conversational interviews. Even the generation of simple sketches gave us glimpses into their inner worlds. I now question how important it was to conduct traditional interviews in the first place. Workshops are just so much more dynamic and active than interviews, and I consistently came away feeling more connected to the participants and their experiences.

This weekend was highly reflective. With new insights in hand, we spent over five hours evaluating what we discovered. There was so much for us to consider and it was only once we had the chance to pick it all apart together as a team that we could begin to make sense of it all. Many of our initial assumptions were blown out of the water. Our newfound perspective gave us a real sense of how important relationships are in the field of teaching. We also learned that technology is probably the least important factor for educators — with the exception of a desire for students to have high-speed internet at home, there was little to no interest in improving access to technology generally.

I’m still getting used to applying so many different approaches and methods so quickly. I feel like I’m only occasionally operating with a sense of clarity. There has been prolonged fuzziness that’s difficult to describe or ignore. It seems as though new insights provoke deeper questioning, while offering little in the way of certainty. I think this is just the experience of progressively revealing collective and individual ignorance. Before learning enough to act decisively, we must first gaze into the vast abyss of what we still do not know.